Background

I work at Simpragma, an IT services company that aims to revolutionize contact centres with automation expertise built over a decade. The company has built voicebots, chatbots, social media messenger bots, visual IVR, and more for renowned brands, and I have contributed to these as a user experience designer.

Overview

This project was done for a leading micro-finance company (we will use the name “B Finance”) which has over 5 million customers. They wanted to automate payment reminder calls, as the task is repetitive in most cases. I will take you through the design process of the Collections Voicebot at a glance; some stats might be altered for confidentiality. As we had prior knowledge of B Finance’s customer base and had developed a voice bot for them earlier, this project was more like a feature rollout: release to a small segment of customers first, test, and improve for the final launch.

Some specific things to keep in mind while going through any UX case study:

- UX maturity of the design team at Simpragma.

- Duration of research and ideation: 2 weeks.

- Business impact on B Finance in terms of expenditure and ROI.

- Transition for B Finance’s customers from receiving calls from a human customer service executive to an AI-ML-based voice bot.

Prior Knowledge

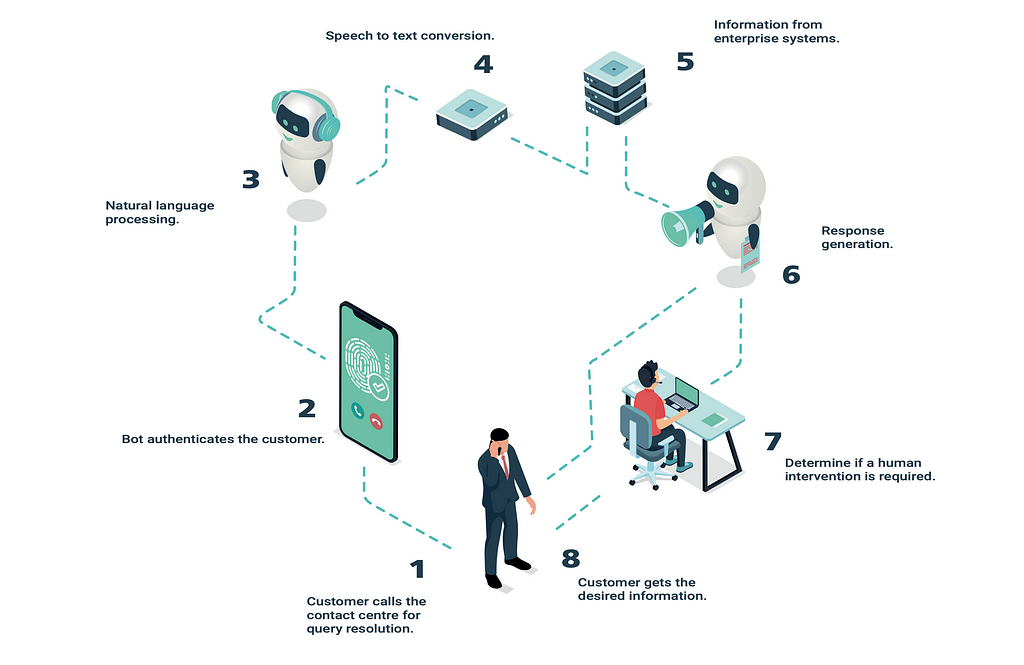

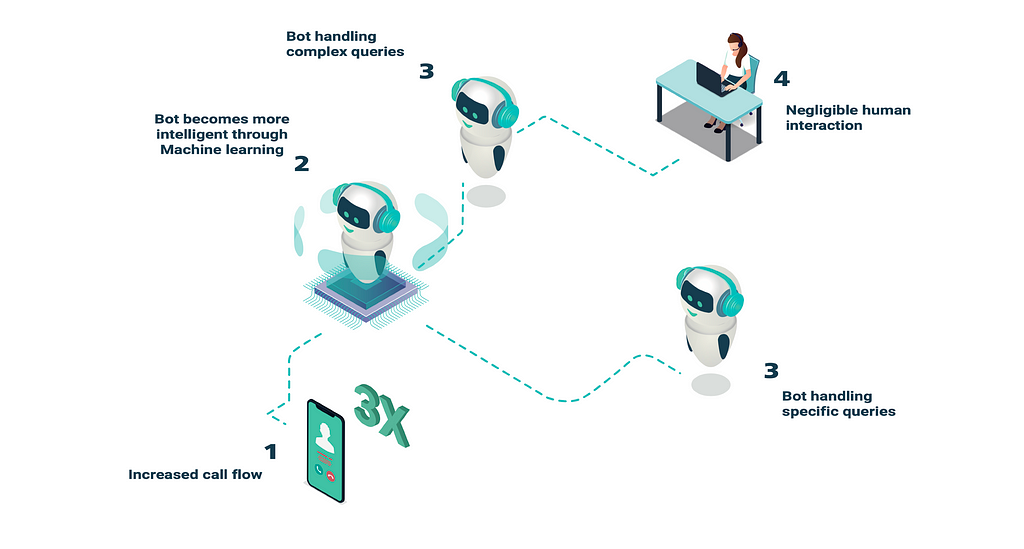

How does the Voice bot function

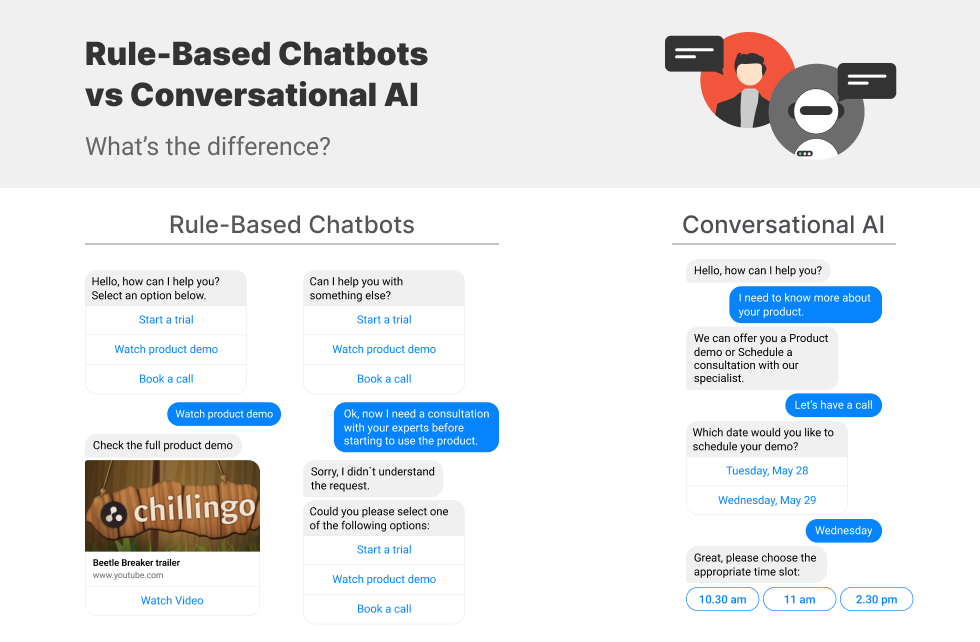

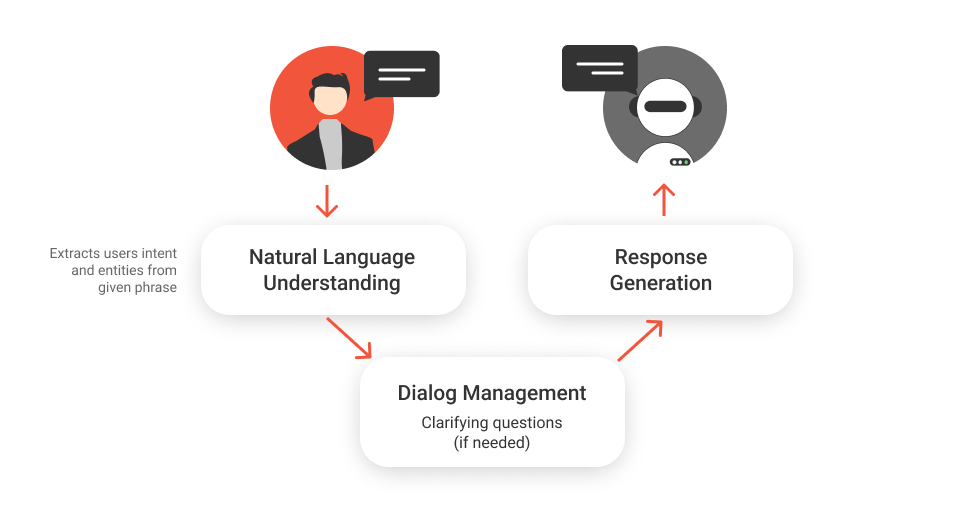

The voice bot uses ASR (automatic speech recognition) to convert speech to text and NLP (natural language processing) to understand what the customer said. The AI then predicts the bot's response from a predefined library, and the response is spoken using TTS (text-to-speech). Machine learning helps train the voice bot over time for complex scenarios.
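
To make the ASR, NLP, and TTS hand-off concrete, here is a minimal sketch of a single conversational turn. Everything in it is a hypothetical stand-in: `fake_asr`, `fake_nlp`, `fake_tts`, and the `RESPONSES` library are illustrative names, not the engines or scripts used in the actual product, and the "audio" is plain UTF-8 text so the example can run without any speech libraries.

```python
# Hypothetical stand-ins: a real deployment would call an ASR engine,
# an NLP model, and a TTS engine at these three points.
RESPONSES = {
    "pending_emi": "Your EMI is pending. Would you like to pay today?",
    "fallback": "Sorry, I did not understand. An agent will call you back.",
}


def fake_asr(audio: bytes) -> str:
    """Pretend speech-to-text: the 'audio' is just UTF-8 text here."""
    return audio.decode("utf-8")


def fake_nlp(text: str) -> str:
    """Pretend intent detection based on a single keyword."""
    return "pending_emi" if "emi" in text.lower() else "fallback"


def fake_tts(text: str) -> bytes:
    """Pretend text-to-speech: returns the reply as bytes."""
    return text.encode("utf-8")


def handle_turn(audio: bytes, asr=fake_asr, nlp=fake_nlp, tts=fake_tts) -> bytes:
    """One turn: speech -> text -> intent -> predefined reply -> speech."""
    text = asr(audio)
    intent = nlp(text)
    reply = RESPONSES.get(intent, RESPONSES["fallback"])
    return tts(reply)
```

Because the three stages are passed in as functions, each can be swapped for a real engine without touching the turn logic.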

For more information on the voice bot process, click on the links below:

- What is Voice bot: An Ultimate Guide for Voicebot AI

- Voicebots 101 Guide: applications, benefits, best practices – SentiOne

Earlier Project with B Finance: Inbound Customer Query Resolution Automation

This was not a completely new project built from scratch. Our team had earlier automated the inbound customer service calls for B Finance and provides continuous support for upgrading workflows, scripts, and intents, and for resolving any other issues. Before automation, B Finance was able to answer approximately 5000 calls per day with 50 telephony lines and 22 agents, and approximately 3000 calls were going unresolved. After automating the calls, B Finance scaled up 3 times easily, as it did not require hiring and training too many agents. 80 percent of calls were handled by the voice bot, and unique cases would go to human agents if needed. The voice bot was available 24/7 and is continuously trained through machine learning to handle more complex queries over time.

Customer Base

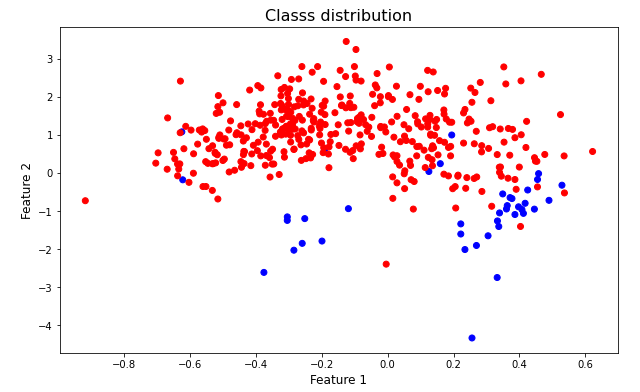

Doing a project with B Finance already gave us a lot of insight into their customer base. Most of the customers have taken small loans for products like mobile phones, home appliances, or other electrical gadgets. As the majority of users were located outside main cities:

- We understood customers’ level of literacy and understanding of technology.

- The majority of the customers speak Hindi in different styles.

- They would use different words for the same query. So, we were able to develop a library of intents (synonymous words) that the bot can recognize to understand the user’s query. For instance, some users might say "Due EMI" and some might say "pending installment", but both mean the same thing. Using such intents, the bot could understand similarities and differences as well.

- A lot of users perceive the voice bot as a human, which can set higher expectations for getting their issues resolved. We made sure to build a human-like bot, but not one exactly like a human customer care agent.

- As everyone has a different grasping speed, we tuned the bot to speak at an average speech rate, but some customers might still interrupt it. The voice bot was built to adapt to, understand, and respond to such customers as well.

- Some customers understood that it is not a real human and thus demanded to speak to one. If the customer is adamant, the voice bot transfers such calls to human agents. It also transfers calls in certain other specified cases.
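
The idea of an intent library can be sketched as a simple phrase lookup. This is only an illustration under stated assumptions: the intent names, the extra phrases, and the `match_intent` helper are hypothetical, only "due EMI" and "pending installment" come from the text above, and a production bot would rely on a trained NLP model (and Hindi phrases) rather than exact phrase matching.

```python
import re
from typing import Optional

# Hypothetical intent library: each intent lists phrases customers might use.
INTENT_LIBRARY = {
    "pending_emi": ["due emi", "pending installment", "pending emi"],
    "talk_to_human": ["talk to agent", "speak to a person", "customer care"],
}


def normalize(utterance: str) -> str:
    """Lowercase and replace punctuation with spaces so phrases compare cleanly."""
    return re.sub(r"[^a-z0-9]+", " ", utterance.lower()).strip()


def match_intent(utterance: str) -> Optional[str]:
    """Return the first intent whose phrase appears as whole words, else None."""
    text = f" {normalize(utterance)} "
    for intent, phrases in INTENT_LIBRARY.items():
        if any(f" {phrase} " in text for phrase in phrases):
            return intent
    return None
```

With this library, `match_intent("What is my Due EMI?")` and `match_intent("Any pending installment?")` both resolve to `"pending_emi"`, while an unrecognized utterance returns `None` and could be routed to a fallback or a human agent.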

Project Brief

After getting the inbound calls automated, B Finance approached us to automate their outbound collection calls. Like any other financial institution, B Finance generates revenue from the difference between what it lends and what it receives back in the form of EMI (principal + interest). Regular payments ensure a fund balance for B Finance, so it can lend the money further to new and existing customers. Thus, collecting payments on time and reminding customers to pay is a crucial operation for B Finance.

Customers need to be reminded regularly about upcoming and overdue EMI payments. This matters especially when a customer hasn’t paid the EMI on time, as it affects their credit score and they have to pay late payment charges after the grace period.

This project would cover approximately 8 lac (800,000) customers, with 300 calling lines operating every day.

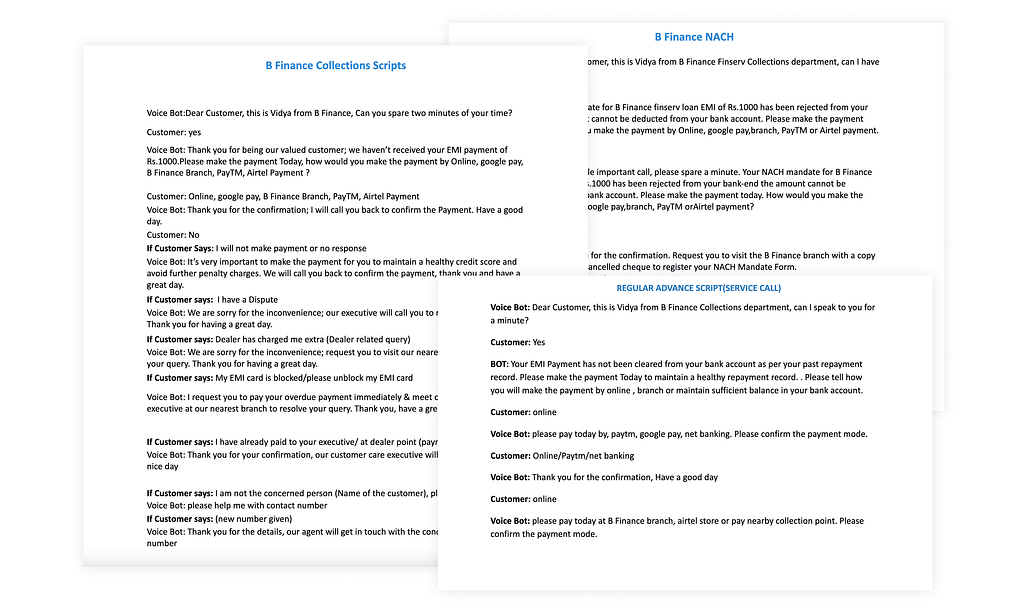

As the client was already operating payment reminders and due collections manually through human agents, they were able to give us data on the different scenarios to be automated, and scripts for the voice bot as well.

These scripts are not finalized until we develop a minimal viable product for testing and improvements.

First Draft

Insights from the scripts

The scripts were not just dialogue between the bot and the user; they also showed what the business required customers to be reminded about.

- Regular Payment Reminder– Reminding customers in advance about upcoming EMI payments.

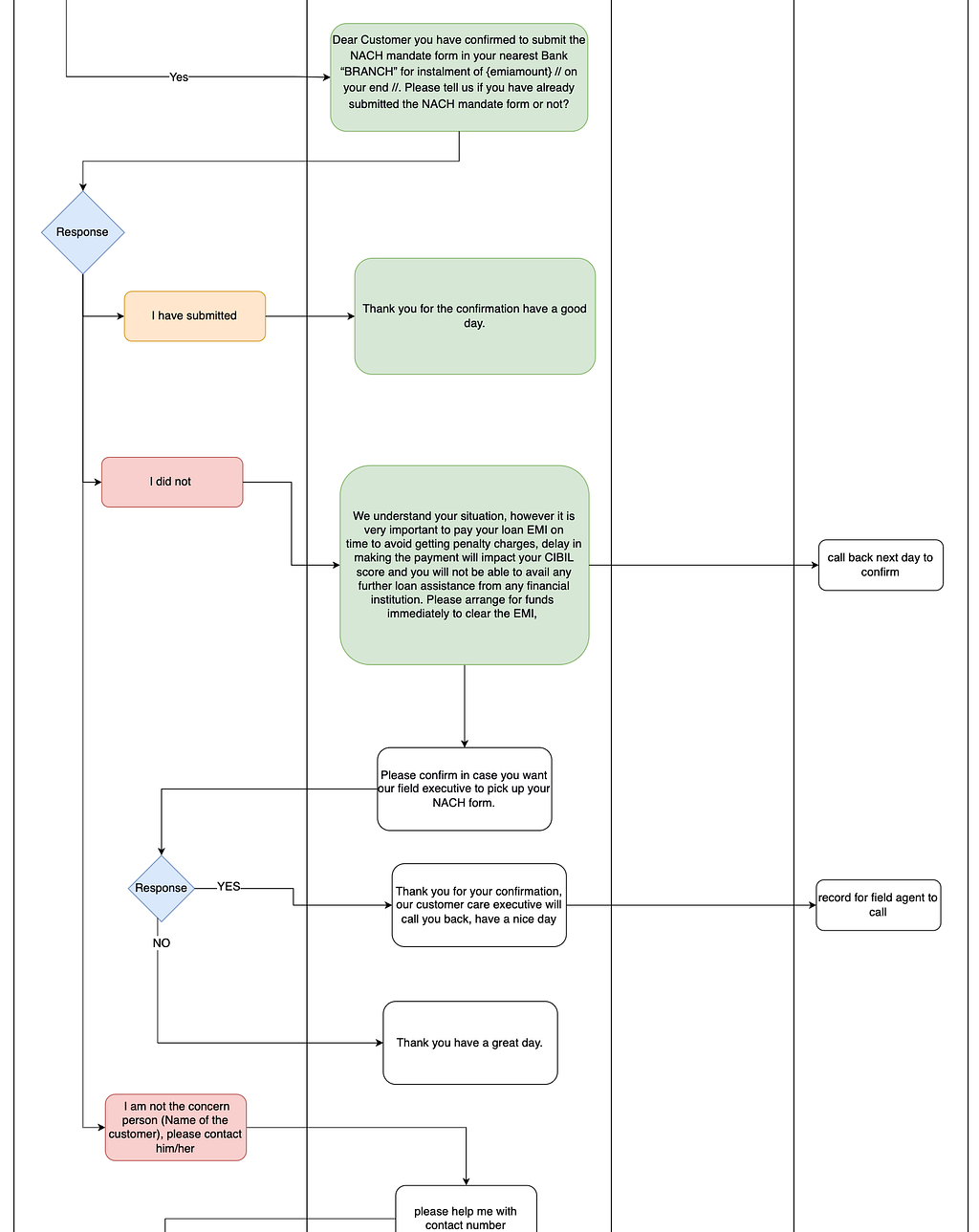

- NACH reminder– NACH (National Automated Clearing House) is a facility offered by banks that lets customers pay their EMI directly from their bank accounts. Sometimes customers forget to update NACH with their banks and have to be reminded before the due date to avoid late payments.

- Pending EMI reminder– In case a customer forgets to pay the EMI on the due date, B Finance reminds them to pay to avoid penalties and negative effects on their credit score.

- NACH update pending/failed– In case NACH did not get approved, B Finance cannot debit the customer’s bank account. Thus they are asked to pay by other methods.

- Smart Debit failed– In case NACH/smart debit was set up but the customer’s account could not be debited due to low balance, they can either pay by other methods or add funds to the bank account, as B Finance will re-attempt the debit in a few days.

- Advance Reminder Call– A bit overlapping, but it can be a necessary step for customers with a bad payment history.

- Follow-ups– Scripts for follow-up calls were shared too.

- Disconnection– The script might change in case of a call disconnection due to network reasons.

- Customer declines/doesn’t answer– The bot might have to be more assertive or might need a change of plan.

Quick Secondary Research

In a B2B scenario, it wasn’t easy to get an understanding of a competitor’s product. After spending a couple of hours on getting a demo from competitors, I moved on to secondary research and came up with some crucial steps to follow for our MVP.

- Introduce yourself

- Confirm Name/DOB etc (Identify correctly)

- Check availability of customer (good time to talk)

- Tell the intent of the call

- Empathize with customer

- Help the customer with ease of payment or alternatives if you can

- Inform about consequences like late payment charges etc.

- Inform the customer properly about the next steps

- Send necessary documents if required

- Give a contact number for the customer to call back in case

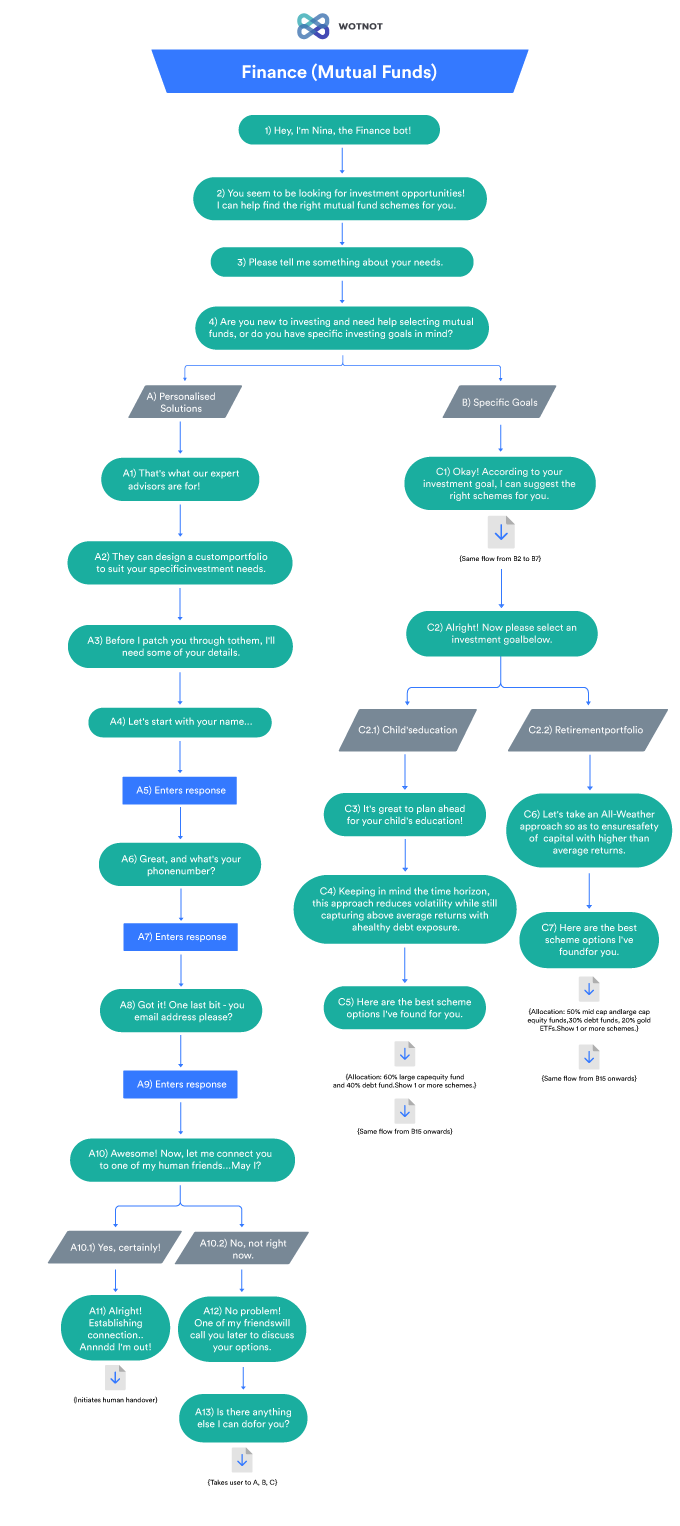

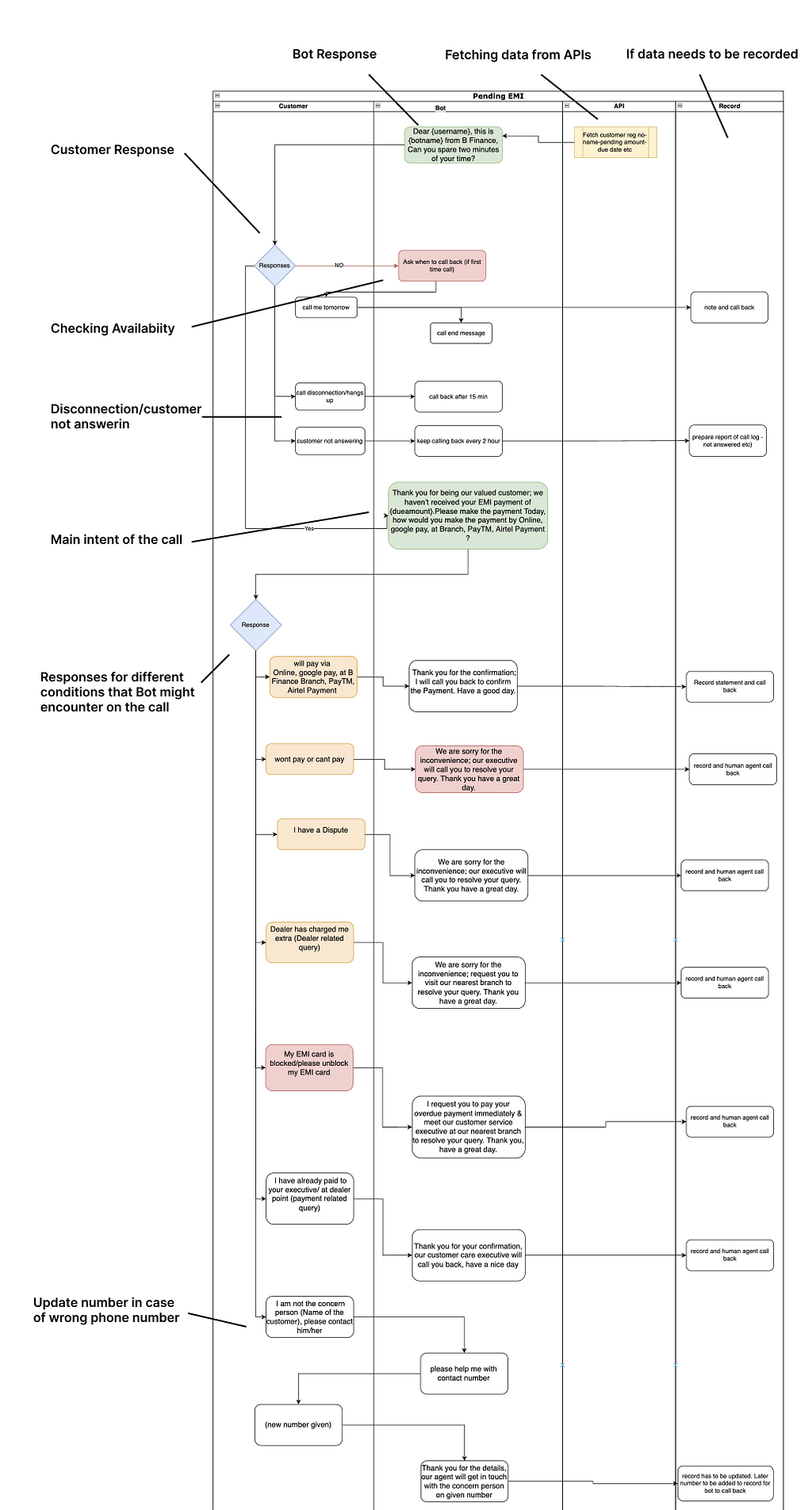

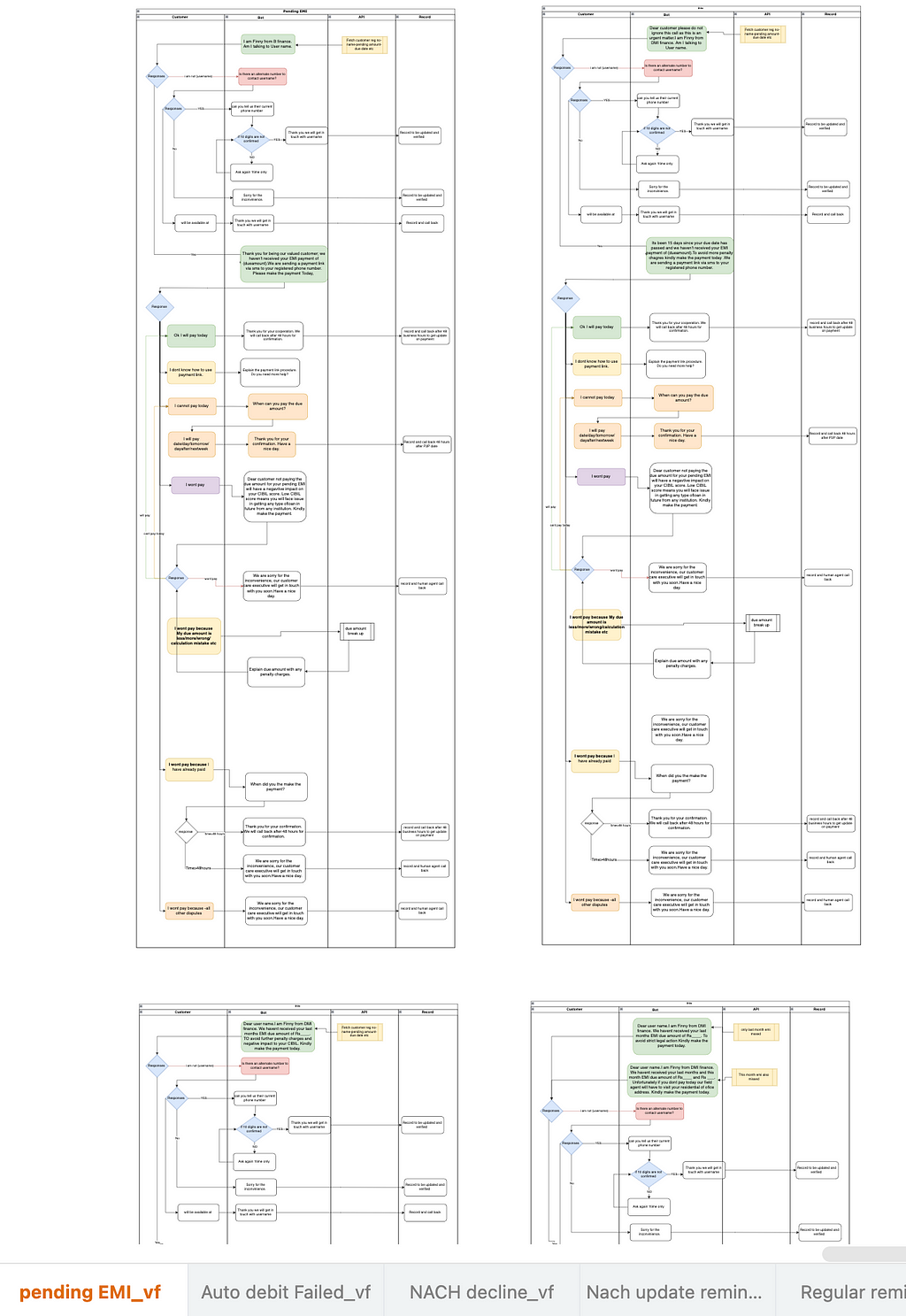

Initial Conversation Flow

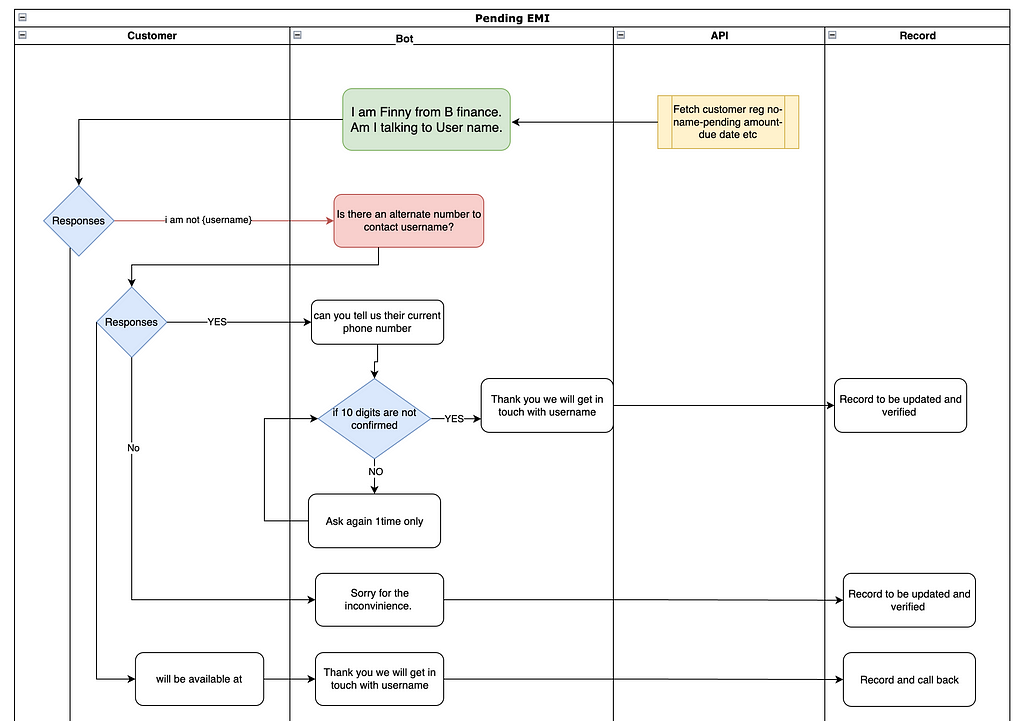

The initial flows were created for a first conversation with the client. Having a conversational flow in place makes it easier for both the development team and the client to understand the interaction between the voice bot and the customer. It also acts as a base for the information architecture, from which the dev team can design the decision tree at a later stage.

Here is a glimpse of one crucial flow, the Pending EMI reminder, out of the 6 flows that I created for discussion. The other flows have certain similarities, as the nature of customer responses was similar. It should give you an idea of what I presented to the client.

Some more cases were added along with each flow:

- Follow-up– In case the customer doesn’t pay even after the reminder call, the voice bot calls back and explains the consequences of delaying payment.

- Network issue– The voice bot will call back after some time and apologize for the inconvenience caused.

- Customer not answering– If the customer responds after some time, the voice bot will be more assertive and tell the customer to pay attention to the matter. In case a customer doesn’t answer at all, the call logs are maintained and a human agent tries to reach them.

For the current release, the phone lines are limited to 300 for eight lac customers. If an average call takes 4 minutes to complete and the number of operational hours is limited to 12 per day as per government guidelines, the voice bot will be able to call approximately 43,000 customers per day.
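
The capacity estimate is simple arithmetic, sketched below. The function names and the `utilization` parameter are hypothetical. With no idle time at all, 300 lines at 4 minutes per call over 12 hours come to 54,000 calls per day; the roughly 43,000 quoted here is what the same arithmetic gives at about 80% line utilization. That 80% is an assumption made for this sketch, since the case study does not say how the estimate was derived.

```python
import math


def daily_call_capacity(lines: int, avg_call_minutes: float,
                        hours_per_day: float, utilization: float = 1.0) -> int:
    """Calls per day across all lines, scaled by an assumed utilization factor."""
    calls_per_line = hours_per_day * 60 / avg_call_minutes
    return math.floor(lines * calls_per_line * utilization)


def days_to_cover(customers: int, calls_per_day: int) -> float:
    """Days needed to call every customer once at the given daily capacity."""
    return customers / calls_per_day


upper_bound = daily_call_capacity(300, 4, 12)         # 54000, no idle time
at_80_percent = daily_call_capacity(300, 4, 12, 0.8)  # 43200, assumed 80% utilization
cycle_days = days_to_cover(800_000, at_80_percent)    # about 18.5 days
```

At that rate, one full pass over 8 lac customers takes between 18 and 19 days, which is why the number of lines directly limits how soon any customer can get a follow-up call.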

If the due date has passed, the flow would be similar, with just the intent message changed. In the case of a general reminder, the flow would be like this.

Client feedback

We had a quick call with the client and went through the flow together. We even did a small roleplay exercise where one participant was the user and the other became the voice bot. A few important points that we discovered:

1. Checking availability was time-consuming and did not fall into the bracket of a ‘must do’ or ‘should do’ at this stage, so it can be added later. As the reminder itself was crucial for the business to generate revenue and was the client’s priority, we decided to skip this step.

2. We were given updated information about the modes of payment: customers need not visit a branch to make payments.

3. For any dispute with the dealer, or if the customer has already paid, etc., a human agent would call back if the voice bot is not able to resolve the issue.

I wanted some more insights from B Finance’s customers, but due to a time crunch it wasn’t possible. We decided that I would analyze their reactions and behavior from the call recordings taken after the first release.

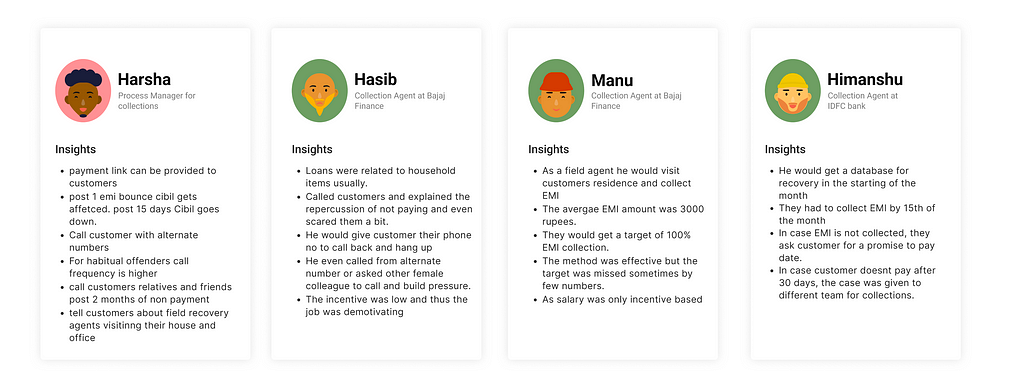

I still went ahead and did a quick qualitative study with agents and process managers working in the collections process at various companies.

Qualitative Insights

Questionnaire

- What is the collection process that you follow?

- How often do you call the customer?

- Is there a right time to call the customer?

- What is your tone while talking to customers?

- What is the strategy to make the customer pay their dues?

- What is the repeat rate of defaulters? Are you able to check the history of late payments for one customer?

- What are the consequences of not paying on time?

- How do you deal with people who do not pay regularly?

Insights

- It is not easy to get users to pay as they come around with various excuses.

- It’s human nature that agents might get aggressive or rude when dealing with similar situations every day. There is pressure to meet targets, as their earnings are largely dependent on incentives.

- Financial institutions have incentive-based income plans for agents to avoid low competency, as the revenue depends on EMI payments from customers.

- There is no hypothecation in microfinance loans where the annual income of households is low. The company cannot claim the items back from customers, nor would re-selling these products serve much purpose. Getting installments is the only way to grow the business.

- Agents might inform customers about the repercussions of having a low CIBIL score, but customers are either unaware of it, as they are from low-income groups, or not bothered by a low CIBIL score.

- Agents go out of their way to collect EMI amounts from customers. To date, there is no ideal way to ensure timely payments from all customers. Bad debt is a huge problem for financial institutions.

- It is not ideal, but agents call customers’ relatives and friends to build pressure on customers, as they might pay out of embarrassment. Similarly, they scare customers about recovery agents turning up at their office or residential addresses.

- The idea of embarrassing people does work somehow. It might be a societal thing as well, and for that we need more research, which I might take up in the next phase.

- The call frequency is higher and more assertive tones are used for habitual offenders.

- People block or avoid calls so agents might use alternative numbers.

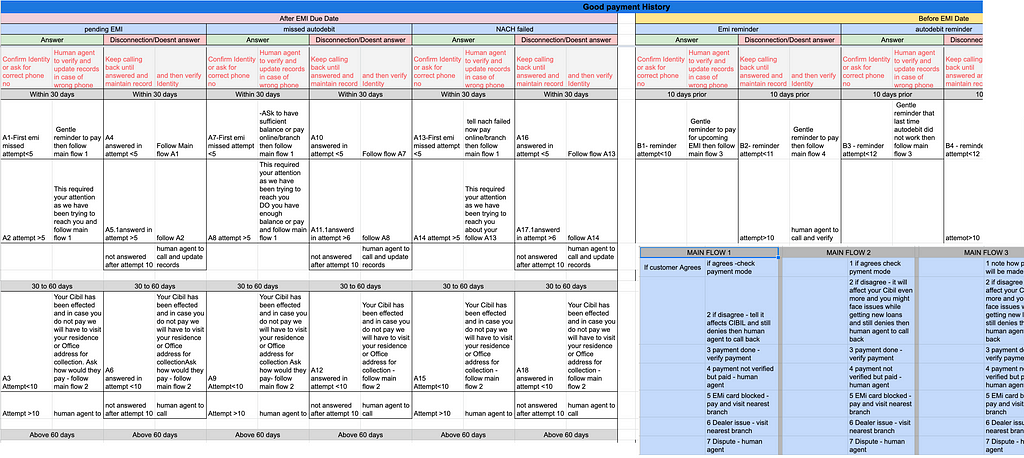

Selection Matrix

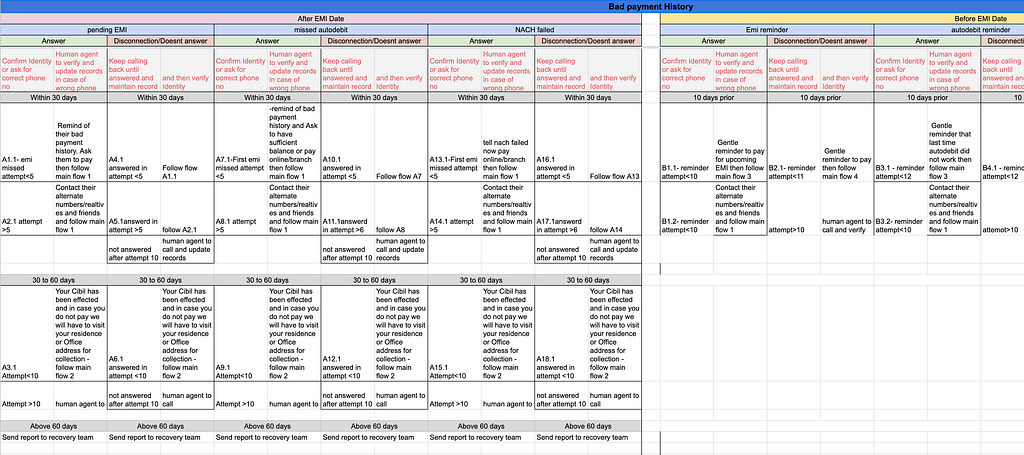

Based on the above insights, I tried to map out the selection criteria for calling. There were various differentiators and categories to be followed, like:

- Customers with good payment history vs bad payment history: Voicebot would need to understand this to change the tone with the help of a script.

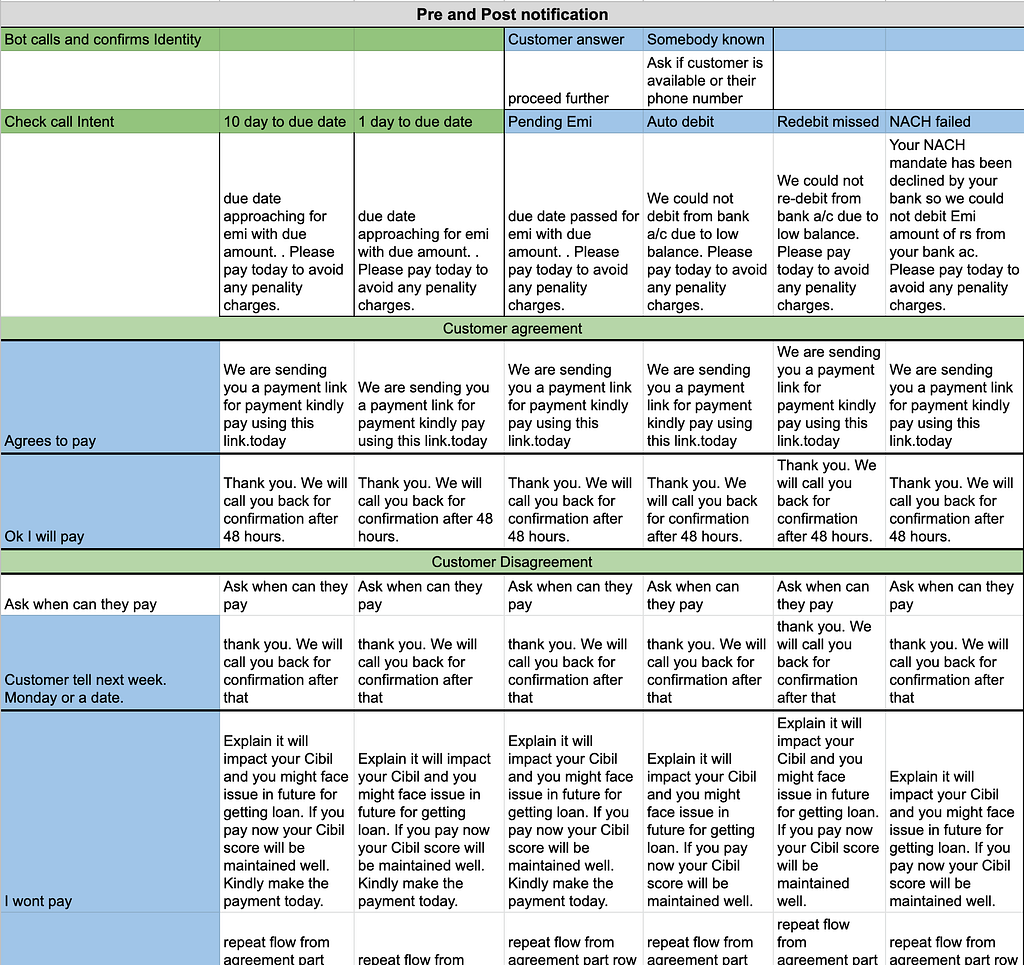

- Pre and post notification: voice bot needs to identify whether it is calling before or after the due date.

- Call Intent: Voicebot needs to inform the customer about the reason for the call like a general reminder for payment, pending EMI, missed auto-debit or NACH failed.

- The intensity of reminders: Customers need to be reminded, and the ones who still have not paid might have to be reminded again.

- Timeline: Intensity has to increase with timelines. It depends both on business requirements and voice bot availability.

- Identification: The customer needs to be identified properly before going ahead with the call; otherwise, the records need to be updated.

2nd Draft (Ideation)

So I started building flows according to the selection matrix, where the intensity increases every 30 days if a customer doesn’t pay, and after 60 days the case goes to the field recovery teams.

The call frequency also increased with time, and in the case of call avoiders, human agents would reach out.

As the voice bot would have certain limitations, human agents would take over in unique dispute cases, disagreements over payment, payment gateway issues, etc., as it was too early for the voice bot to handle them.

While I was creating flows with different intensities of messages over time for defaulters, I did a quick check with my developers. I felt the selection matrix was overwhelming and there were too many flows to be followed for the prototype.

My assumption matched the developers’ thoughts as well, and we decided to simplify it.

3rd Draft (Ideation)

For the prototype to be developed quickly with minimal effort, yet be good enough to be tested in a real environment with approximately 150 people, I reduced the complicated matrix to a simple one.

After a lot of ideation, I was able to combine all flows except the NACH reminder, as it did not require the customer to agree or disagree to pay.

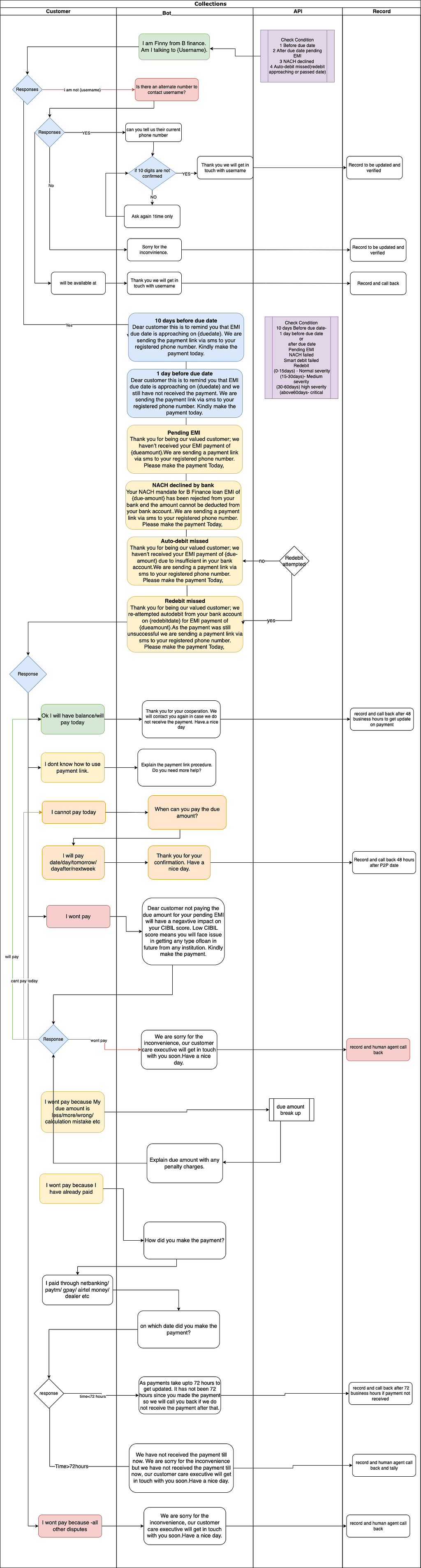

Flow for Development

The combined flow would be used to develop the prototype. We chose some must-do tasks to be taken care of for the prototype, as all problems can’t be solved at once.

1. Voicebot will fetch customer data through an API to determine the due date, due amount, and other necessary details.

2. The voice bot would confirm that it is speaking to the right customer. If not, it would try to get the right phone number and record it. Later it would be manually verified and updated by human agents.

3. Voicebot would then introduce the intent to the customer after checking the condition:

   - Before the due date: 10 days before the due date, or 1 day before the due date

   - After the due date: pending EMI, NACH failed, smart debit failed, or redebit

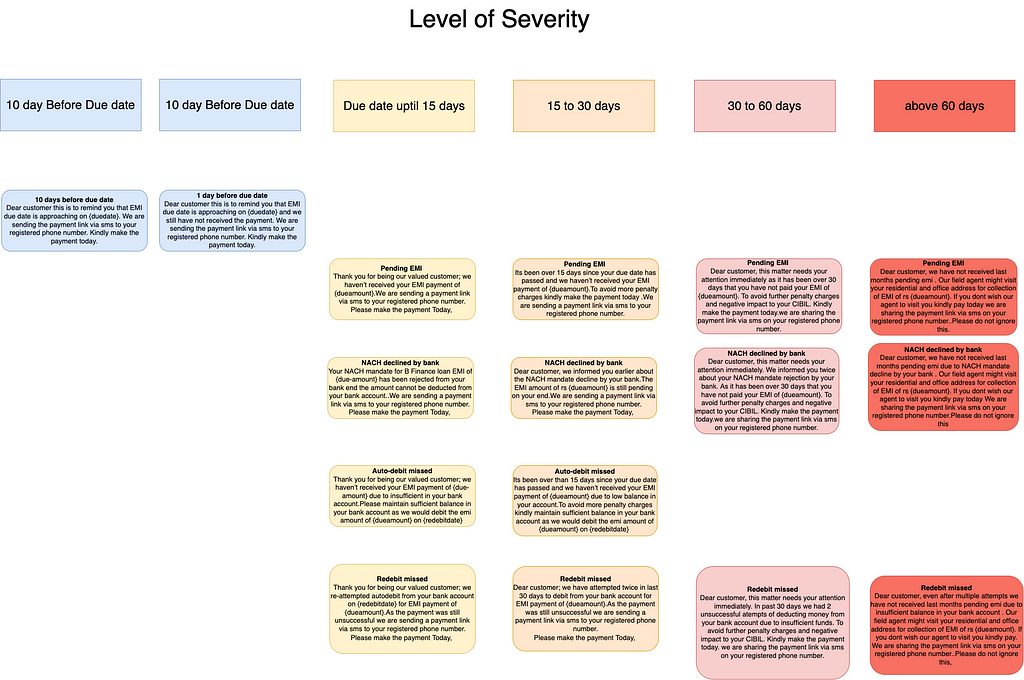

4. The script would get more severe with time for customers who do not pay. For the current prototype, we removed the differentiator of good and bad payment history as well.

   - 0–15 days: normal severity

   - 15–30 days: medium severity

   - 30–60 days: high severity

   - Above 60 days: critical

5. Voicebot will give clarity on the payable amount: due amount + interest + late payment charges.

6. In case of any dispute where a customer does not agree to pay, the voice bot will end the call with a message that a customer care agent will call the customer back.
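
The condition check, severity tiers, and payable amount from the steps above can be sketched as follows. This is a minimal illustration, not the production logic: the `Account` fields and function names are hypothetical, and because the severity ranges share their endpoints (15, 30, 60), the sketch assumes each range includes its upper bound, so day 15 is still normal and only days above 60 are critical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Account:
    """Hypothetical shape of the customer data fetched through the API."""
    due_date: date
    due_amount: float
    interest: float = 0.0
    late_charges: float = 0.0


def severity(days_overdue: int) -> str:
    """Map days past the due date to a script severity (upper bounds inclusive)."""
    if days_overdue <= 15:
        return "normal"
    if days_overdue <= 30:
        return "medium"
    if days_overdue <= 60:
        return "high"
    return "critical"


def script_tone(account: Account, today: date) -> str:
    """Pick a plain reminder before the due date, otherwise a severity tier."""
    days_overdue = (today - account.due_date).days
    if days_overdue < 0:
        return "reminder"  # call placed before the due date
    return severity(days_overdue)


def payable_amount(account: Account) -> float:
    """Due amount + interest + late payment charges."""
    return account.due_amount + account.interest + account.late_charges
```

For an account due on 10 January 2024, `script_tone` returns `"reminder"` on 1 January, `"normal"` on 25 January (15 days overdue), `"medium"` on 26 January, and `"critical"` from 11 March onwards (61 days overdue).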

Development of prototype

As we already had prior knowledge and some modules ready for the same client, development of the first release would take 4 to 6 weeks, during which I would work closely with the developers to gather insights and note down gaps and opportunities.

Scripts and Intents

Scripts are generally improved over time with every release. Similarly, new intents (words spoken by a customer that the bot can use to understand their query) are continuously added to the library for training the voice bot.

Testing

Like every other contact center, we record and audit the calls along with an audit manager from the client side. We listen to 10 calls per day to understand what went right and what did not. Then we divide tasks according to tech issues or process issues and make improvements continuously.

The data we get from calls is also used every day to train the bot on new intents, and the scripts for the voice bot are modified as well.

Next Release

Testing will give us insights for improvements for the next releases but we do have some key factors already to look at.

- Currently, the phone lines are limited to 300, and thus a customer might get a follow-up call only after 18 days. We need to increase phone lines or find a way to prioritize follow-ups for regular violators.

- We need to add and test certain steps, like ‘checking availability with the customer’, which we removed earlier.

- We need to personalize the calls and change scripts accordingly.

- Train the bot for complex scenarios to reduce human agent intervention.

- Get qualitative insight from B Finance’s customers.

- In case a customer doesn’t answer, should the voice bot use the alternate number to call back, or call the customer’s friends and family to reach out?

- Should there be a ground team for collecting EMI offline?

- I will work on convincing people to pay. To date, offline physical recovery has worked best in case of bad debts. Empathetic user research with such customers will yield great insights.

- In case customers block one number, what would be an alternate route to reach them?

I will add the further progress of this project in the next case study. Stay tuned.

Ux Case study-Automating Outbound Collection calls through Voice-bot for a Micro-Finance Company was originally published in Chatbots Life on Medium.