
Blog

-

Nsfw image chat bot

I’m looking for a good free chat bot with images.

submitted by /u/Additional-Ad-5681

-

Best free AI for the closest ‘Her’ experience?

What would be the best free setup for AI conversation that can be used without time limits? Something that can handle natural, everyday conversations without long pauses in between, and be reasonably intelligent, like the smallest distilled DeepSeek R1 model for instance. It would be nice if it could do both Korean and English, but just English is fine too. Do you think an offline AI with Whisper (voice input) and Piper (speech output) is a viable option?

submitted by /u/beethoven77

-

Free unlimited AI GF site

Hi all, I built seduxai.com. You can go on there and have UNLIMITED FREE chats with a variety of different girls and guys about anything, from NSFW topics to something more casual and friendly.

NO FILTERS, none at all… Chats are also 100% private and secure!

We do offer subscriptions, but they only add premium features like sexting and phone calls. Messaging is free and will always remain unlimited!

Check it out and talk to a girl, or create your own and set their description and personality!

site: seduxai.com

discord: https://discord.gg/3PsQQ7sgdD

submitted by /u/Grizzled_Duke

-

Platforms without stupid guidelines

Would anyone know of any character chatbot platforms or projects that don’t have guidelines? Unfortunately, I’ve come to realize that these guidelines, in addition to limiting creative freedom, leave it to the discretion of devs and moderators to delete our bots. I’m not talking about images of criminal pornography or explicit gore; I understand that really shouldn’t be allowed. But anything else that is even minimally NSFL already breaks the damn guidelines of these unfortunate people. So… does anyone know of a truly free platform? Maybe something outside the surface web?

Lately I’ve been using Chub AI. It’s the freest one I’ve found so far, but the idiots insist on deleting my bots just because the image seems too realistic, and it’s not even NSFW.

submitted by /u/Reptile_111

-

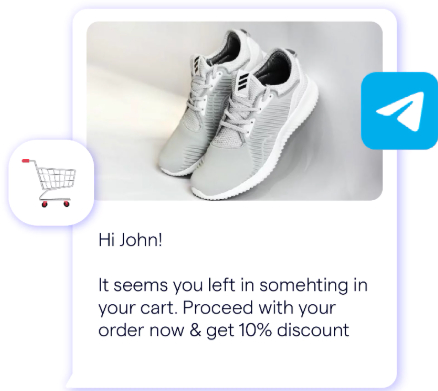

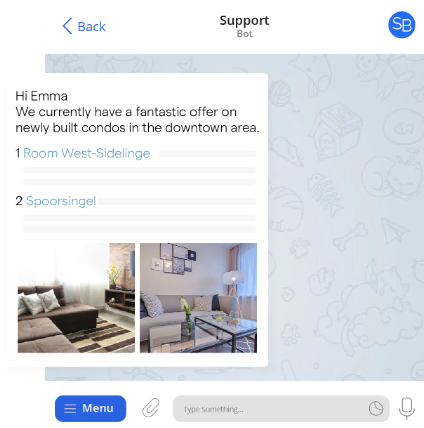

Telegram Chatbots: Are They a Good Fit for Your Business?

Telegram chatbots are rapidly gaining traction, with over 1.5 million bots already created. As one of the fastest-growing messaging platforms, Telegram boasts a user base exceeding 550 million globally, offering businesses an unparalleled opportunity to engage with their audience effectively.

In an era where customers prefer direct communication, research from Social Media Today reveals that 67% of customers favor messaging apps over social media for interacting with businesses.

Moreover, eMarketer projects a 27% increase in chatbot adoption on messaging platforms this year alone, as more businesses recognize their value.

From automating conversations to launching promotional campaigns, Telegram chatbots allow you to connect with your customers on a platform they already use. But is it the right choice for your business?

This article explores the key benefits, functionalities, and strategies for implementing Telegram chatbots effectively.

What is a Telegram Chatbot?

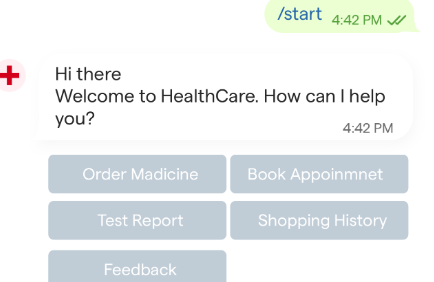

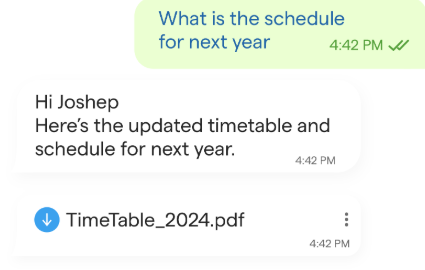

A Telegram chatbot is a software program that interacts with users via the Telegram messaging platform. Many of these bots use artificial intelligence (AI) to understand user queries, provide information, and perform specific tasks, while simpler ones respond to predefined commands and menus.

Businesses can use Telegram chatbots for a variety of purposes, such as automating customer service, streamlining sales processes, and delivering marketing campaigns. Bots can operate in both one-on-one chats and group conversations, offering versatility and scalability for businesses of all sizes.

Key Benefits of Telegram Chatbots for Businesses

Here are some of the key benefits businesses can get from using a Telegram chatbot:

1. 24/7 Customer Support

Telegram chatbots ensure that your business remains accessible at all hours. They can provide instant answers to frequently asked questions, troubleshoot issues, and guide customers through their inquiries, enhancing customer satisfaction.

2. Improved Efficiency and Productivity

By automating repetitive tasks like responding to inquiries or processing orders, chatbots free up your employees to focus on more complex responsibilities. This streamlining of operations can significantly boost productivity.

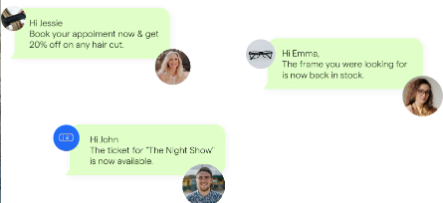

3. Personalized User Experiences

AI-powered chatbots can analyze user behavior and preferences to offer tailored recommendations and services. This personalization creates a more engaging and satisfying user experience, increasing customer loyalty.

4. Cost Savings

Implementing chatbots reduces the need for additional customer support staff. Because a chatbot can handle many queries simultaneously, it can mean fewer hires, lower operational costs, and less overtime pay for night shifts.

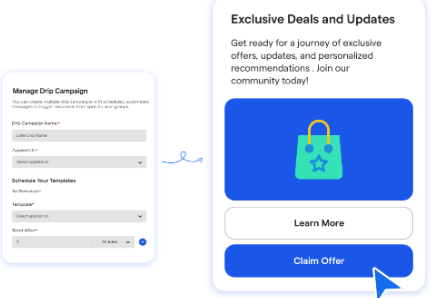

5. Marketing Automation

Telegram chatbots can send personalized messages, promotions, and updates to users automatically. This allows businesses to nurture leads, boost engagement, and drive sales without requiring manual intervention.
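As a rough illustration of the "personalized messages without manual intervention" idea, here is a minimal Python sketch. It assumes that subscribers are stored as plain dicts with hypothetical `chat_id`, `first_name` and `opted_in` fields, and that `send` is a hypothetical callback that would wrap the Bot API's `sendMessage` call. It fills in a template per user, skips anyone who has not opted in, and spaces out sends. For bulk sends, Telegram's bot FAQ suggests staying at roughly 30 messages per second. Treat that figure as something to verify against the current docs.

```python
import time
from string import Template


def build_campaign(subscribers: list[dict], template: str) -> list[tuple[int, str]]:
    """Personalize one promotional message per opted-in subscriber."""
    tmpl = Template(template)
    messages = []
    for sub in subscribers:
        if not sub.get("opted_in", False):
            continue  # skip anyone who has not agreed to receive promotions
        # safe_substitute leaves unknown placeholders untouched instead of raising.
        text = tmpl.safe_substitute(first_name=sub.get("first_name", "there"))
        messages.append((sub["chat_id"], text))
    return messages


def send_paced(messages: list[tuple[int, str]], send, per_second: float = 30.0) -> None:
    """Call send(chat_id, text) for each message, spacing calls to stay under a rate limit."""
    interval = 1.0 / per_second
    for chat_id, text in messages:
        send(chat_id, text)
        time.sleep(interval)


# Hypothetical subscriber records for illustration only.
subscribers = [
    {"chat_id": 101, "first_name": "Ana", "opted_in": True},
    {"chat_id": 102, "first_name": "Ben", "opted_in": False},
    {"chat_id": 103, "opted_in": True},
]
campaign = build_campaign(subscribers, "Hi $first_name, this week's offer is live!")
send_paced(campaign, send=lambda chat_id, text: print(chat_id, text))
```

Keeping message building separate from sending makes it easy to preview or test a campaign before anything reaches real users.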

How Telegram Chatbots Work

Understanding how Telegram chatbots function is crucial for maximizing their potential. Here’s a simple breakdown of their operation:

- User Interaction: A user sends a message or command to the chatbot. The bot receives the input and begins processing it.

- Message Processing: The chatbot interprets the message, often using Natural Language Processing (NLP), to analyze intent and determine an appropriate response.

- Response Generation: The chatbot generates a reply or performs an action based on its analysis.

- Response Delivery: The response is sent back to the user, who can then continue the interaction or take the desired action.
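The first three steps above can be sketched in a few lines of Python. This is a deliberately simple stand-in: keyword overlap takes the place of a real NLP intent model. The intent names, keywords and replies are hypothetical. The last step, delivery, is a network call to Telegram and is left out here.

```python
import re

# Hypothetical keyword lists: a stand-in for a trained NLP intent classifier.
INTENT_KEYWORDS = {
    "greeting": {"hi", "hello", "hey"},
    "order_status": {"order", "tracking", "shipped", "delivery"},
    "pricing": {"price", "cost", "plan", "subscription"},
}

RESPONSES = {
    "greeting": "Hi! How can I help you today?",
    "order_status": "Please send your order number and I'll check its status.",
    "pricing": "You can compare our plans on the pricing page.",
    "fallback": "Sorry, I didn't catch that. Could you rephrase?",
}


def classify_intent(text: str) -> str:
    """Message processing: pick the intent whose keywords overlap most with the message."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    best_intent, best_hits = "fallback", 0
    for intent, keywords in INTENT_KEYWORDS.items():
        hits = len(words & keywords)
        if hits > best_hits:
            best_intent, best_hits = intent, hits
    return best_intent


def handle_message(text: str) -> str:
    """User interaction in, generated response out (delivery is a separate API call)."""
    if text.strip().startswith("/start"):
        return RESPONSES["greeting"]  # Telegram commands begin with "/"
    return RESPONSES[classify_intent(text)]


for incoming in ["/start", "Has my order shipped yet?", "How much does the pro plan cost?"]:
    print(incoming, "->", handle_message(incoming))
```

Swapping `classify_intent` for a call to an actual NLP model would leave the rest of the flow unchanged.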

Chatbots can also integrate with APIs, databases, and machine learning models to expand their functionalities and handle more complex tasks.
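To connect these steps to Telegram itself, a bot can long-poll the Bot API's `getUpdates` method and answer with `sendMessage`. The following is a minimal standard-library sketch, not production code. It has no retry or error handling, and the environment variable name `TELEGRAM_BOT_TOKEN` is a hypothetical choice. The token itself is issued by @BotFather.

```python
import json
import os
import urllib.request

API_BASE = "https://api.telegram.org/bot{token}/{method}"


def call(token: str, method: str, payload: dict) -> dict:
    """POST a JSON payload to a Bot API method and return the decoded response."""
    request = urllib.request.Request(
        API_BASE.format(token=token, method=method),
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    # The HTTP timeout must exceed the long-polling timeout used below.
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.load(response)


def extract_text_messages(updates: list[dict]):
    """Yield (chat_id, text) for updates that contain a plain text message."""
    for update in updates:
        message = update.get("message")
        if message and "text" in message:
            yield message["chat"]["id"], message["text"]


def next_offset(updates: list[dict], current: int) -> int:
    """Ask for updates after the newest one seen, which marks earlier ones as handled."""
    if not updates:
        return current
    return max(update["update_id"] for update in updates) + 1


def run(token: str, reply) -> None:
    offset = 0
    while True:
        # getUpdates holds the connection open for up to `timeout` seconds.
        data = call(token, "getUpdates", {"offset": offset, "timeout": 30})
        updates = data.get("result", [])
        for chat_id, text in extract_text_messages(updates):
            call(token, "sendMessage", {"chat_id": chat_id, "text": reply(text)})
        offset = next_offset(updates, offset)


if __name__ == "__main__":
    # TELEGRAM_BOT_TOKEN is a hypothetical variable name; the token comes from @BotFather.
    token = os.environ.get("TELEGRAM_BOT_TOKEN")
    if token:
        run(token, reply=lambda text: f"You said: {text}")
```

Here `reply` is where a message handler, a database lookup or an ML model would plug in. Long polling is the simplest option. Telegram also supports webhooks for bots that run behind a public HTTPS endpoint.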

Evaluating Your Business Needs

Before adopting a Telegram chatbot, assess your business requirements carefully.

1. Customer Support Needs

If your business handles a high volume of customer inquiries and struggles with delays, a chatbot can be a game-changer. It can help resolve queries efficiently, improving overall customer satisfaction.

2. Target Audience Analysis

Consider the preferences and habits of your audience. If they are tech-savvy, prefer digital communication, and already use Telegram, a Telegram chatbot will likely resonate with them.

3. Scalability

If you anticipate growth in customer interactions, a chatbot can help manage the increased demand. It ensures your support processes remain scalable without compromising quality.

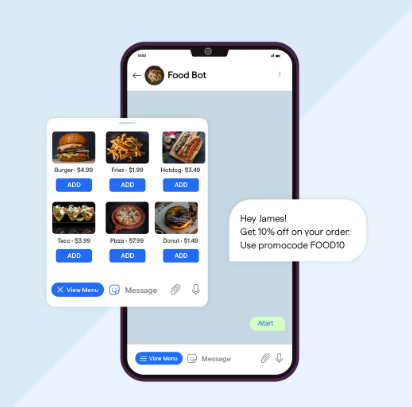

Potential Use Cases for Telegram Chatbots

Telegram chatbots can be tailored to fit various business functions. Here are a few use cases to consider:

Customer Service and Support

A chatbot can handle FAQs, provide order updates, and assist customers with troubleshooting issues. This not only improves response times but also reduces the workload on your support team.

Sales and Lead Generation

Chatbots can engage potential customers, qualify leads, and recommend products based on user preferences. They can even facilitate payments, simplifying the sales process.
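A chatbot usually "qualifies" a lead by asking a few questions and then scoring the answers. This is a minimal rule-based sketch in Python. The fields, point values and the threshold of 60 are illustrative assumptions, not a standard scheme, and would need tuning against your own sales data.

```python
from dataclasses import dataclass


@dataclass
class Lead:
    budget: float          # stated monthly budget (hypothetical field)
    team_size: int
    timeline_days: int     # how soon they plan to buy
    asked_for_demo: bool


def score_lead(lead: Lead) -> int:
    """Add points for each buying signal; weights are illustrative only."""
    score = 0
    if lead.budget >= 500:
        score += 30
    if lead.team_size >= 10:
        score += 20
    if lead.timeline_days <= 30:
        score += 30
    if lead.asked_for_demo:
        score += 20
    return score


def qualify(lead: Lead, threshold: int = 60) -> str:
    """Route high scorers to the sales team and keep nurturing the rest."""
    return "sales" if score_lead(lead) >= threshold else "nurture"


print(qualify(Lead(budget=1000, team_size=15, timeline_days=14, asked_for_demo=True)))
```

A transparent points system like this is easy for a sales team to audit and adjust before moving to anything more sophisticated.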

Marketing and Promotions

Businesses can use Telegram bots to send targeted messages, promote upcoming events, and share exclusive offers. With multimedia support, chatbots can deliver visually engaging content to boost customer interest.

FAQ Automation

Transform your website’s FAQ section into an interactive chatbot. Customers receive instant answers to their queries, reducing wait times and improving their overall experience.
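One lightweight way to turn an FAQ list into chatbot answers is fuzzy string matching, which also tolerates small typos. The sketch below uses Python's standard `difflib.get_close_matches` rather than an NLP model. The FAQ entries are hypothetical, and the 0.6 cutoff is simply `difflib`'s default.

```python
import difflib
from typing import Optional

# Hypothetical FAQ content; keys are stored already normalized.
FAQ = {
    "what are your opening hours": "We're open 9am to 6pm, Monday to Friday.",
    "how do i reset my password": "Use the 'Forgot password' link on the login page.",
    "do you ship internationally": "Yes, we ship to most countries.",
}


def normalize(text: str) -> str:
    """Lowercase, drop punctuation and collapse whitespace."""
    kept = "".join(ch for ch in text.lower() if ch.isalnum() or ch.isspace())
    return " ".join(kept.split())


def answer_faq(question: str, cutoff: float = 0.6) -> Optional[str]:
    """Return the answer for the closest FAQ entry, or None if nothing is close enough."""
    matches = difflib.get_close_matches(normalize(question), FAQ.keys(), n=1, cutoff=cutoff)
    return FAQ[matches[0]] if matches else None


print(answer_faq("Do you ship internationaly?"))
```

Returning `None` instead of a best guess gives the bot a clear signal to ask the user to rephrase or to hand over to a person.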

Limitations and Challenges

While Telegram chatbots offer many benefits, there are also some challenges to consider:

Language Barriers

Chatbots may struggle with understanding slang, complex queries, or poorly phrased messages. Regular updates and training can help reduce these limitations.

Complex Situations

For intricate customer issues, chatbots may need to escalate the query to a human agent. Ensuring a smooth transition is vital for maintaining a positive customer experience.
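A smooth handoff usually depends on two things. The first is deciding *when* to escalate. The second is passing enough context that the customer doesn't have to repeat themselves. This Python sketch escalates when the user explicitly asks for a person or when the bot fails to understand two messages in a row. The trigger phrases and the two-failure limit are hypothetical choices.

```python
from dataclasses import dataclass, field

# Hypothetical phrases that signal the user wants a person.
HANDOFF_PHRASES = ("human", "agent", "real person", "representative")


@dataclass
class Conversation:
    chat_id: int
    failed_turns: int = 0  # consecutive messages the bot could not handle
    transcript: list[str] = field(default_factory=list)


def should_escalate(conv: Conversation, text: str, understood: bool, max_failures: int = 2) -> bool:
    """Record the message, then escalate on an explicit request or repeated failures."""
    conv.transcript.append(text)
    conv.failed_turns = 0 if understood else conv.failed_turns + 1
    asked_for_human = any(phrase in text.lower() for phrase in HANDOFF_PHRASES)
    return asked_for_human or conv.failed_turns >= max_failures


def handoff_summary(conv: Conversation, last_n: int = 5) -> str:
    """Context for the human agent: the most recent messages in this chat."""
    recent = "\n".join(conv.transcript[-last_n:])
    return f"Chat {conv.chat_id} escalated. Recent messages:\n{recent}"


conv = Conversation(chat_id=7)
should_escalate(conv, "my invoice is wrong", understood=False)
if should_escalate(conv, "it charged me twice??", understood=False):
    print(handoff_summary(conv))
```

Resetting the failure counter after any understood message keeps one confusing question from sending every chat to a human.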

Maintenance and Updates

To remain effective, chatbots require regular updates to match changing customer expectations and market trends.

Data Privacy and Security

Since chatbots collect and store user data, businesses must prioritize robust security measures to protect customer information and comply with data protection regulations.
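One common data-minimization step is to avoid storing raw user IDs in analytics or logs. Telegram user IDs are integers, so a plain hash of them could be reversed by brute force. A keyed hash (HMAC) avoids that as long as the key stays secret. This Python sketch is an illustration under that assumption, not a compliance recipe. The environment variable name `USER_ID_PEPPER` and the record fields are hypothetical.

```python
import hashlib
import hmac
import os

# Keep this key secret and separate from the stored data (e.g. in a secrets manager).
# USER_ID_PEPPER is a hypothetical variable name; "change-me" is only a placeholder.
PSEUDONYM_KEY = os.environ.get("USER_ID_PEPPER", "change-me").encode()


def pseudonymize(user_id: int, key: bytes = PSEUDONYM_KEY) -> str:
    """Stable keyed hash of a user ID that can't be linked back to the user without the key."""
    return hmac.new(key, str(user_id).encode(), hashlib.sha256).hexdigest()


def minimal_record(user_id: int, message: str, keep_text: bool = False) -> dict:
    """Store only what analytics needs: a pseudonymous ID and, optionally, the text."""
    record = {"user": pseudonymize(user_id), "length": len(message)}
    if keep_text:
        record["text"] = message
    return record


print(minimal_record(123456789, "Where is my order?"))
```

Because the same user always maps to the same pseudonym, you can still count returning users without keeping their actual Telegram IDs.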

Conclusion

Telegram chatbots present a powerful tool for businesses looking to improve customer engagement and streamline operations. With over 550 million users on the platform, it’s an opportunity too significant to ignore.

From providing 24/7 support to automating marketing campaigns, Telegram chatbots can transform how your business interacts with customers.

Platforms like BotPenguin make it easy to create and deploy Telegram chatbots without any coding expertise. Their user-friendly interface, pre-built templates, and seamless integration ensure your chatbot is up and running quickly.

As messaging apps continue to dominate, adopting a Telegram chatbot could be a pivotal step in enhancing your customer experience and driving business growth. Partner with BotPenguin to unlock the full potential of chatbots for your business today!

Frequently Asked Questions (FAQs)

What are the advantages of using a Telegram chatbot for my business?

A Telegram chatbot can streamline operations by automating repetitive tasks, enhancing customer support, and boosting user engagement. This leads to improved efficiency and higher customer satisfaction.

Which industries can benefit from implementing Telegram chatbots?

Telegram chatbots are versatile and suitable for various industries such as e-commerce, healthcare, financial services, and any sector that prioritizes customer service.

Are Telegram chatbots an affordable option for small businesses?

Yes. Telegram chatbots are cost-efficient and scale with demand, which makes them a valuable tool for small businesses looking to optimize resources.

Can Telegram chatbots deliver personalized customer experiences?

Absolutely. By leveraging user data, Telegram chatbots can customize interactions, offering tailored responses and recommendations that enhance the overall customer experience.

Telegram Chatbots: Are They a Good Fit for Your Business? was originally published in Chatbots Life on Medium, where people are continuing the conversation by highlighting and responding to this story.

-

The CxD interview guide


Get the most out of your conversation design job interview

Interviews go both ways. In these trying times of layoffs and non-existent UX job listings, it might seem a little pretentious to ask that candidates be “more picky” about their future employer, but the questions you pose the hiring team have 2 core functions.

The questions you ask let you:

- Vet the role/team

- Implicitly tell your potential employer about your priorities (both in design and in your career)

A successful conversation design job interview is not just about impressing your potential manager; it’s also about ensuring that the company and team align with your career goals and values. Sussing out the role can also save you time in the job search (if it turns out not to be the kind of work you want to do, e.g. chat vs. voice) or set the right expectations for what the day-to-day will actually be like (e.g. joining as a solo designer on a customer experience team vs. joining a design team).

The following is a list of questions you can bring to your next conversation design interview. Yes, these are some of the actual questions I’ve asked in interviews throughout my career so far. I’ve separated them into 2 lists by experience level, based on where I was mentally when I asked them.

Junior “baby” conversation designer questions

Description: This might be your first role in tech or your first interview for a design role. At this point in your career, you might pick opportunities based on how much support you’ll have to make the transition, hoping the delta between the job requirements and your technical skills is small.

- [Culture] What does it take to be successful here?

- [Culture] How much of this role will be remote in the future?

- [Product] How does this team define success according to its mission?

- [Product] How does this team ensure your experiences ship at quality?

- [Product] Does this org already have existing content guidelines or a content strategy in place?

- [Product] What direction does [company] want to take with its conversation design strategy?

- [Team] Are there other conversation designers on the team?

- [Team] Besides [x designer], will there be anyone else with experience or a background in conversation design?

- [Team] What do you think would be some of the challenges for this position?

- [Team] Does this role offer any education budget? e.g. for online courses, conferences, workshops, etc.

- [Team] Is there anything else we haven’t covered that you think is important for me to know about this team?

- [Team] Is this team expected to grow in the future?

- [Bonus] Do you have any hesitations about my background?

Mid “sometimes senior” conversation designer questions

Description: This might be the point in your career where you want to weigh your options. Rather than trying to impress the company or focusing on company culture as a whole, you may ask questions that reveal how the team fits within the larger org structure and whether the day-to-day would feel rewarding for you.

- [Culture] Pros/cons: What is one challenge about working at this company? What is your favorite thing about working at this company? (I would ask this in a 1:1 if the interview format allows)

- [Product] What’s the long-term vision for this conversational application?

- [Tools] What platform do you use to build the bot/assistant?

- [Tools] What other kinds of tools do you use for work?

- [Team] How large would the design team be?

- [Team] Is there room for growth in this position?

- [Team] Can you share more about the onboarding and training process for new hires?

- [Team] Do designers on the team work through the entire design process? Or are there areas designers specialize in?

- [Team] Are designers expected to do their own research or is there a dedicated UX research team?

- [Team] How do designers typically give and receive feedback? Does your team run design crits?

- [Team] Can you share any recent or ongoing UX projects the team is working on?

- [Management] How would others describe your management style?

- [Management] When was the last time you supported a direct report’s growth?

Final Thoughts

Remember, you should always have something prepared when it’s your turn to ask the questions in an interview! Never stay silent. Try to be as candid as possible. If it’s not a good fit, it’s not a good fit, but at least you will have put your best foot forward.

✨BONUS✨

Happy to share that in a few days I will be giving a master class with the Interaction Design Foundation (IxDF)! Even if you can’t join us live, you can still watch the video on-demand. Sign-up page: https://www.interaction-design.org/master-classes/conversation-design-practical-tips-for-ai-design

The CxD interview guide was originally published in Chatbots Life on Medium, where people are continuing the conversation by highlighting and responding to this story.