We’ve finally made it through 2021, the year that drastically changed the way we work in offices. One consequence of the pandemic was the shift from on-site to remote work, and many workplaces have since moved to a hybrid model.

So what does that mean for employers? With hybrid work, manual attendance and time tracking methods no longer hold up, so it’s time to leave those old-school methods behind. Most organizations now prefer a reliable time tracking system for their employees.

In this article, we list ten employee time tracking apps for Slack. Let’s begin.

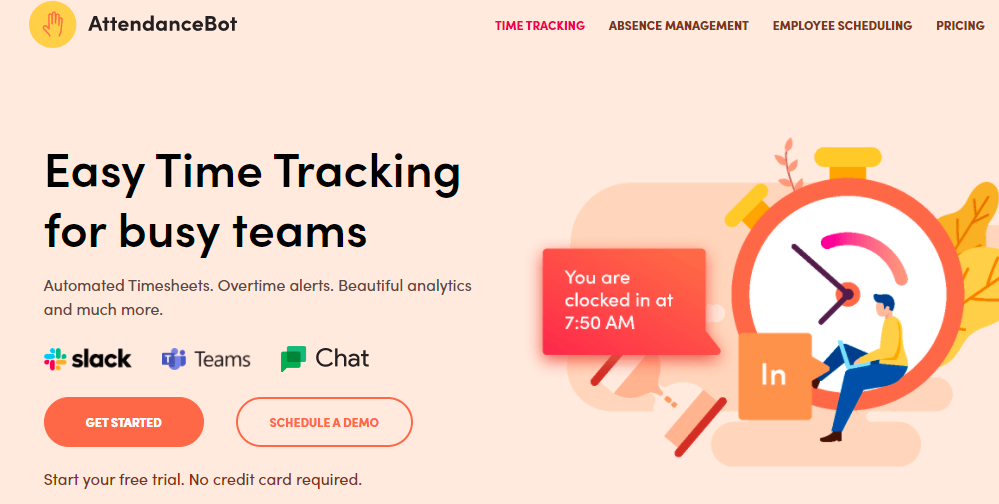

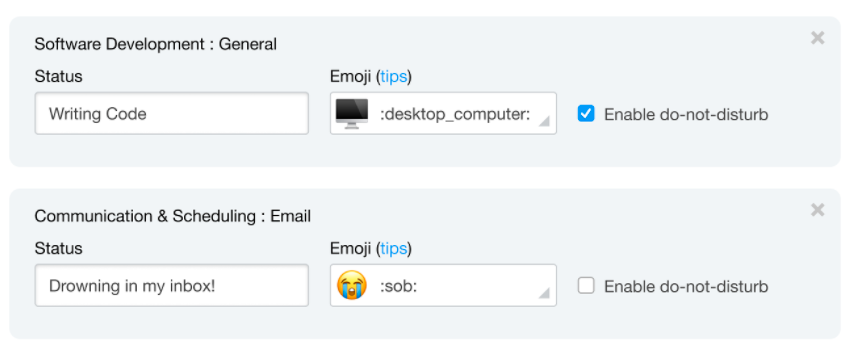

AttendanceBot

AttendanceBot is a powerful time tracking tool for small and medium-sized organizations. Built for remote and hybrid teams, it works from right inside Slack. Best of all, it adapts to your team’s needs: smaller teams can use it as a simple time tracker, while larger teams that want more robust features can take advantage of its additional functionality. With AttendanceBot, you can:

- Clock in or out easily, without worrying about manually tracking the time spent on each task or project every day

- Get reminders to punch in or out as your day begins or ends

- Generate timesheets within Slack

- Track billable hours with ease if you’re a freelancer or an agency

- Download Excel reports, or have them delivered to you periodically

- Keep track of time spent on tasks

- Manage schedules and customize shifts for each team member if your team works in different shifts

- Improve employee engagement and productivity

Pricing

- AttendanceBot offers a 14-day free trial

- Starts at $4 per user per month, billed annually

- Available on iOS, Windows, Linux, Android, Mac

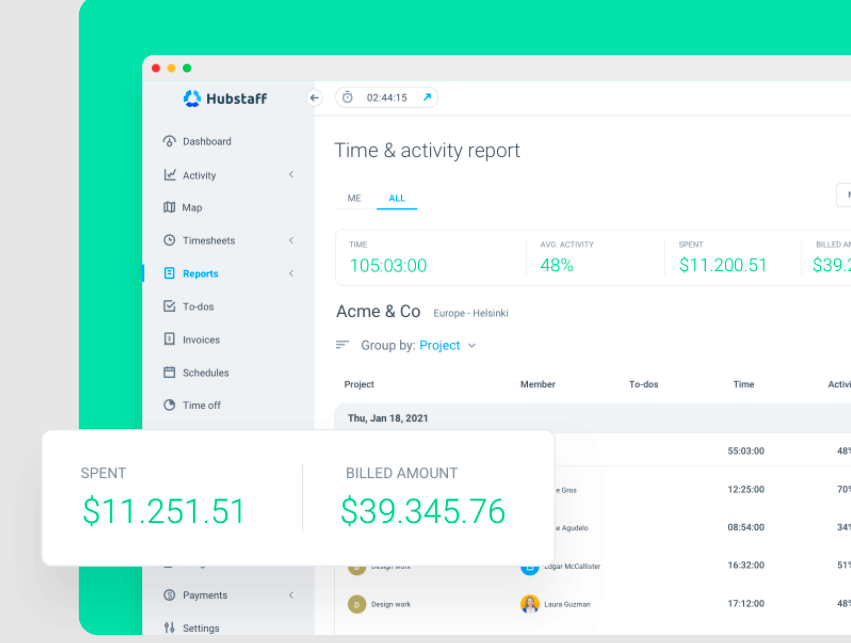

Hubstaff

Second on our list is Hubstaff, an app that helps businesses of all sizes:

- Track time

- Manage team productivity

- Manage their remote workforce

- Track what workers are doing with periodic screenshots

- Measure productivity by tracking keyboard and mouse activity

- Generate online timesheets and team reports

Pricing

- Offers a 14-day free trial

- Starts at $7 per user per month

- Available on macOS, Linux, Windows, ChromeOS, iOS, Android

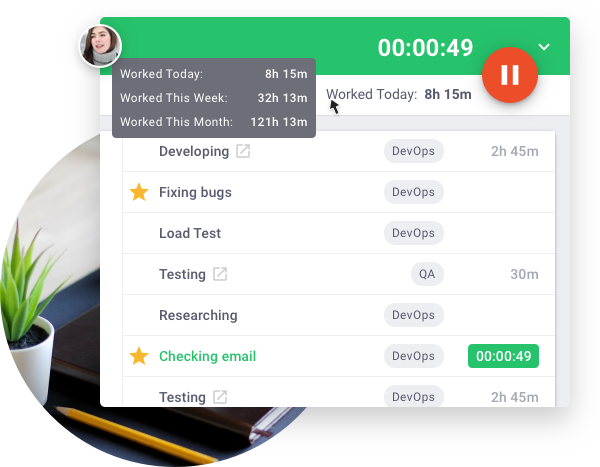

TimeDoctor

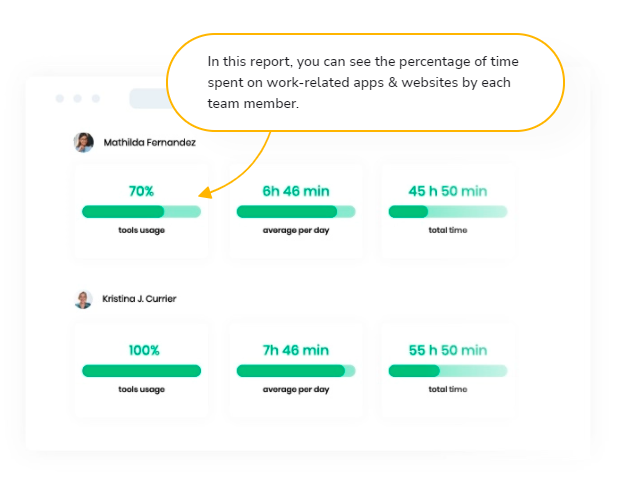

TimeDoctor is an employee time tracking app that helps you measure and analyze how your team spends its time. It also offers insights into who the best performers on your team are. It integrates with more than 60 software tools, and its time tracking records which web pages and apps each employee uses. With TimeDoctor, you can:

- Track time spent on tasks and breaks

- Take automated screenshots

- Generate reports and timesheets

Pricing

- TimeDoctor offers a 14-day free trial

- Starts at $7 per user per month

- Available on macOS, Linux, Windows, Android

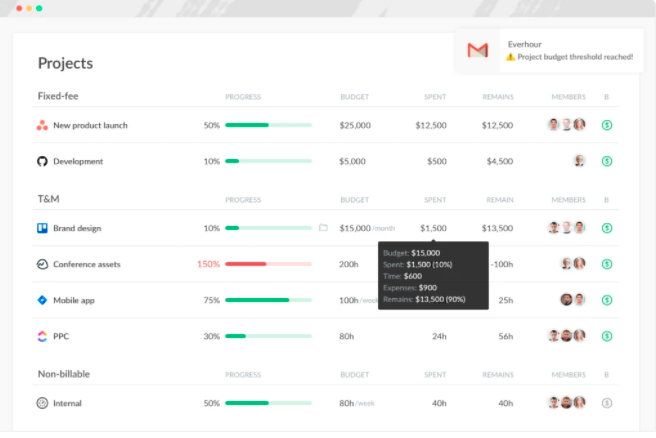

Everhour

Everhour is another employee time tracking tool that lets users track time from the apps they already use. Employees can start and stop a timer to record activities as they work, or log hours manually later. Everhour helps you:

- Track time spent by an employee on each task

- Track employees’ breaks

- Create invoices automatically from time tracking data

- Generate reports

Everhour also integrates with tools such as Asana, Trello, Slack, and Jira.

Pricing

- Offers a free trial of 14 days

- Starts at $8.50 per user per month

- Available on macOS, Windows, Linux, Android, iOS

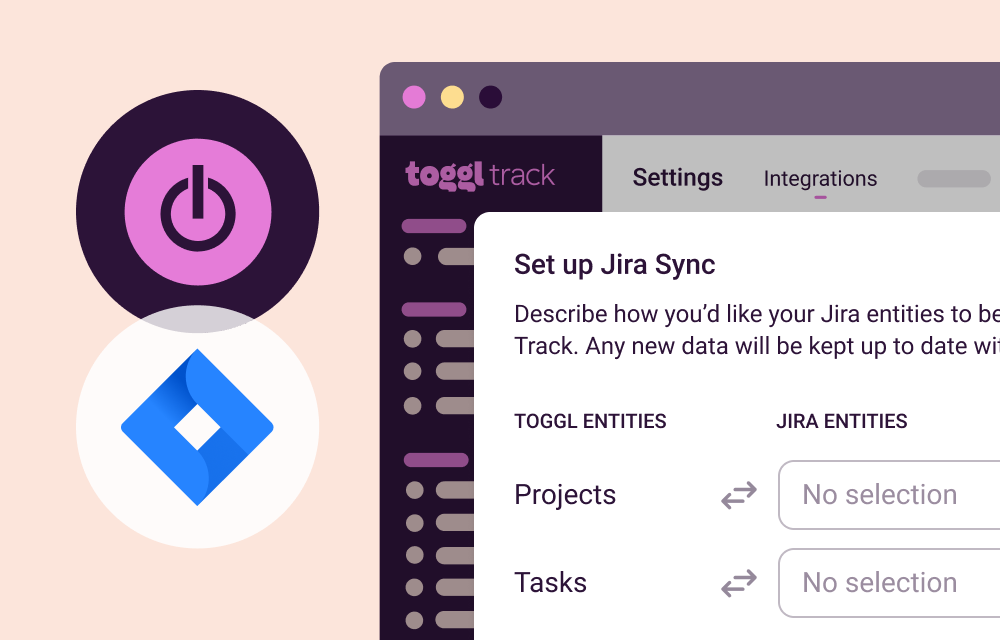

Toggl

Toggl is a time tracking app that helps users:

- Track time across the web app, desktop app, mobile app, or browser extension

- Automatically track apps and websites that you use for more than 10 seconds

- Integrate your Outlook or Google Calendar into Toggl Track’s Calendar

- Set up Toggl Track to trigger time entry suggestions

Additionally, Toggl Track’s browser extensions allow you to start the timer directly from online tools like Asana, Todoist, Trello, and more.

Pricing

- Offers a free trial of 30 days

- Starts at $8 per user per month

- Available on Linux, iOS, Windows, Android, Mac

RescueTime

RescueTime offers an employee work-hours tracker combined with productivity monitoring. It also tracks how much time employees spend on different websites and apps during working hours, which helps employers monitor productivity and identify what their teams should be focusing on.

RescueTime is more of a productivity tracking app than a time tracking app, and that’s what sets it apart. Another distinctive feature is that it lets employers block distracting websites and apps, such as social media.

Pricing

- RescueTime offers a 14-day free trial

- Starts at $9 per user per month

- Available on macOS and Windows

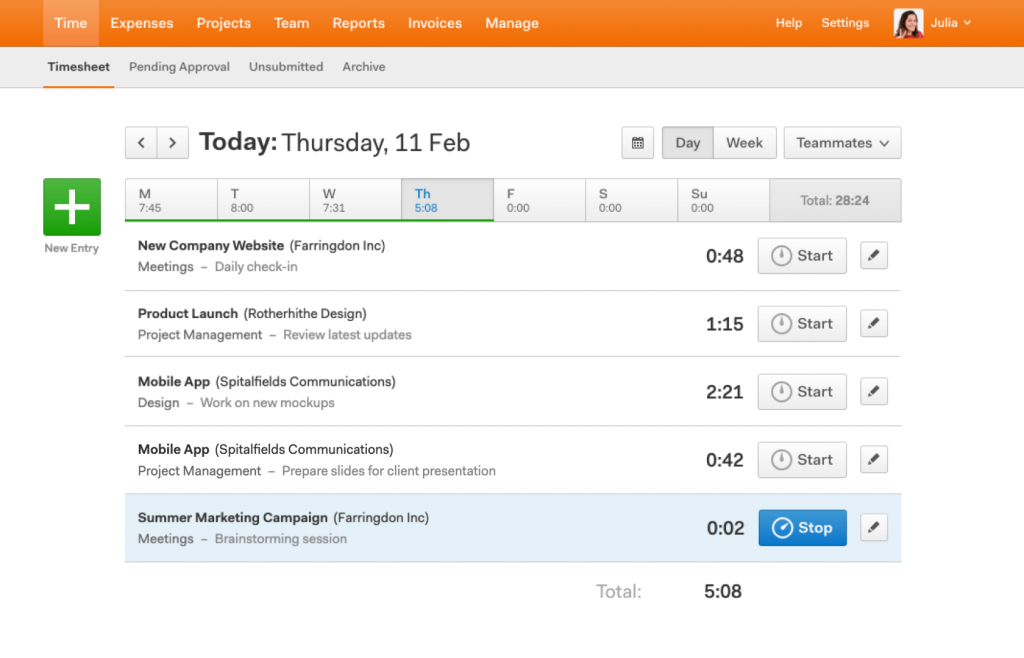

Harvest

Harvest is modern time tracking software that helps you:

- Track time from your desktop, your phone, or the productivity tools you already use every day

- Automate reminders that encourage you to track time consistently

- Start a timer or log hours in Slack

- Check a teammate’s Harvest timer so you don’t interrupt them in the middle of a meeting or deep work

- Get a quick visual overview of a project’s progress and share it with a Slack channel

Pricing

- Offers a free trial of 30 days

- Starts at $12 per user per month

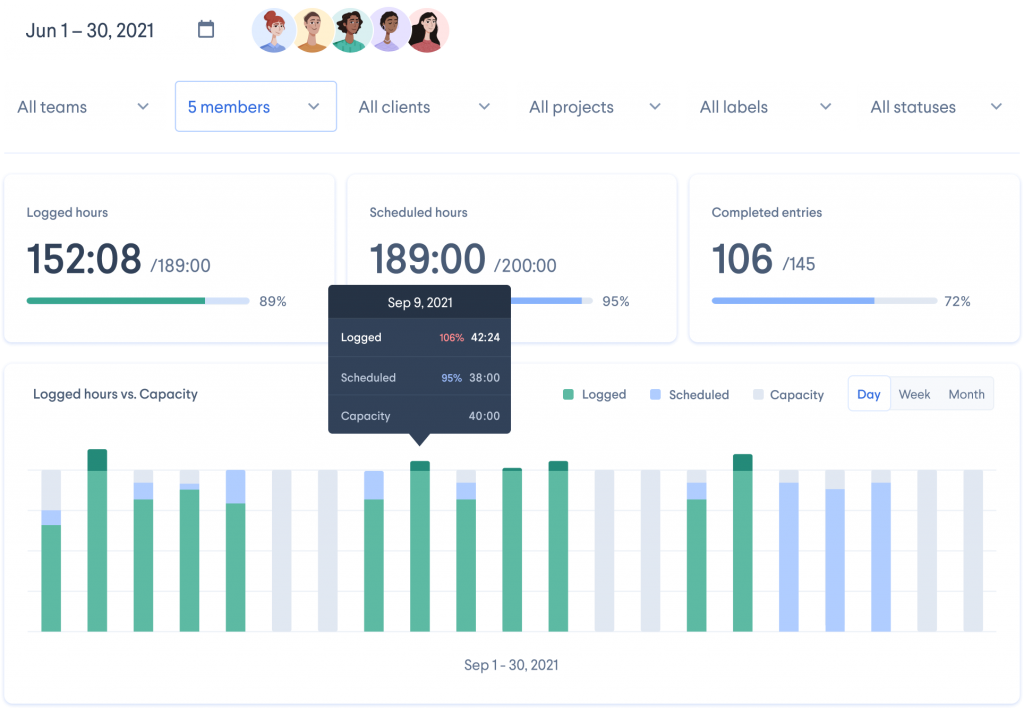

HourStack

HourStack is a weekly time allocation, time management, and time tracking tool for individuals and teams that integrates directly with Slack. It helps teams create effective time blocks that boost productivity.

With easy-to-use features such as quick timers and drag-and-drop scheduling, HourStack makes time management fun for employees and time tracking easy for management and HR. Use HourStack for:

- Time tracking

- Productivity management

- Timer & manual entry

- Project management

Pricing

- Offers a free trial of 14 days

- Starts at $9 per month, billed annually

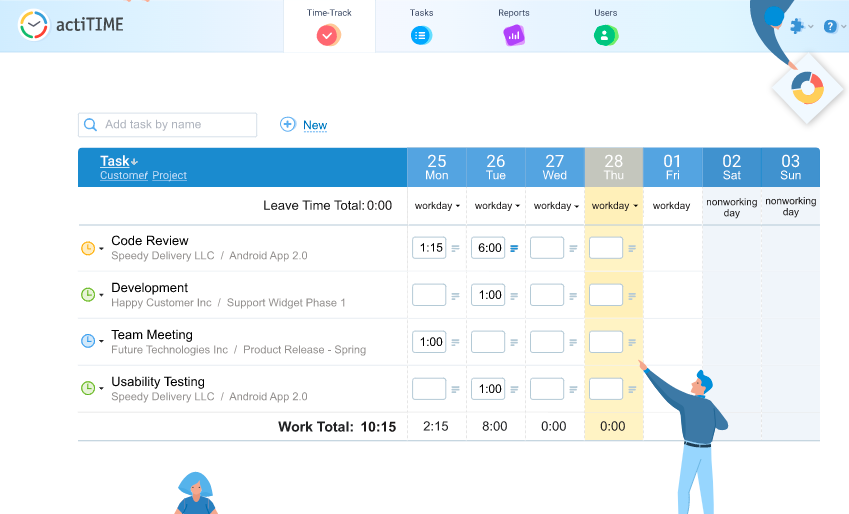

actiTIME

actiTIME is a time tracker that captures time manually or automatically, in the browser or on mobile. Users can also add notes to their time entries and set up individual work schedules for employees.

It also generates timesheets automatically, tracks overtime, and helps manage payroll based on time spent. Its mobile app lets you track time on the go, even offline.

Pricing

actiTIME’s pricing depends on the size of your team. Its free plan is limited to three users. Other plans include:

- actiTIME Online for 1–40 users: $7 per user per month

- actiTIME Online for 41–200 users: $6 per user per month

- actiTIME Online for more than 200 users: custom pricing

- actiTIME Self-Hosted: $120 per user

TimeCamp

TimeCamp is another time tracking solution that lets users track billable hours and monitor employee productivity. It offers more than 50 integrations that let you sync and import your tasks without switching context, and it provides time tracking reports.

TimeCamp suits employers looking for a blend of time tracking, payroll management, and employee productivity monitoring.

Pricing

TimeCamp offers a 14-day free trial and is free for one user for a month.

- The basic monthly plan costs $7 per user

- Pro monthly plan costs $10 per user

Whether your organization is small or large, choose a time tracking app that suits its unique needs. Before investing in one, consider your team size, how your employees work, and your organization’s requirements.

10 Best Time Tracking Bots on Slack was originally published in Chatbots Life on Medium.