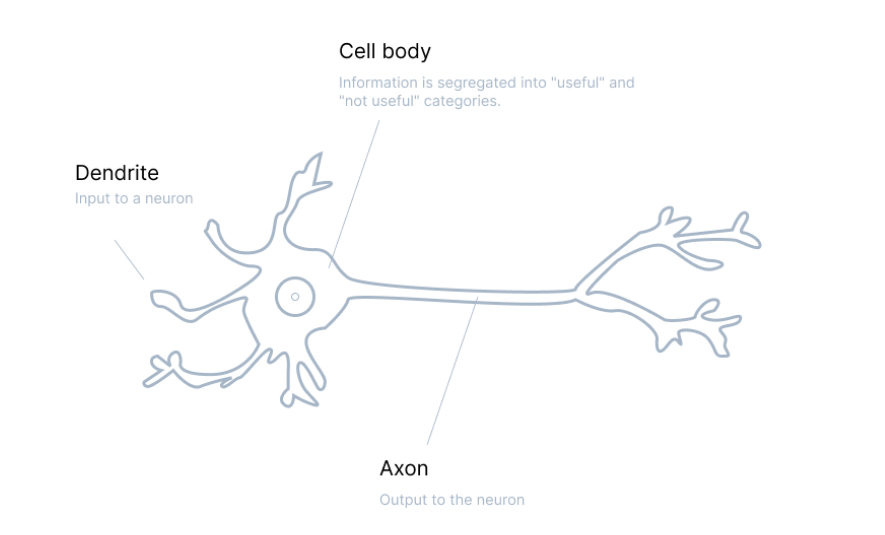

Activation: does it mean activating your car with a click (if it has that, of course)? Well, it is the same concept, but in terms of neurons. Neurons as in the human brain? Again, close enough: neurons, but in an Artificial Neural Network (ANN).

The activation function decides whether a neuron should be activated or not: it takes the neuron's weighted input and turns it into the neuron's output.

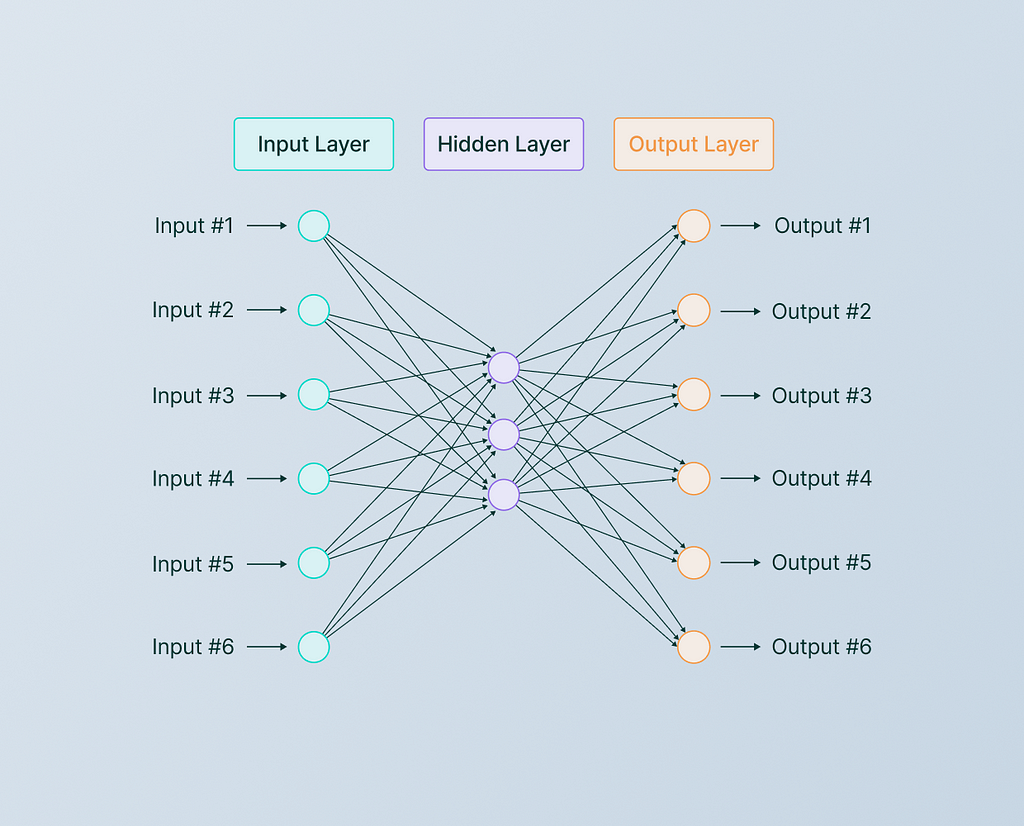

If you have seen an ANN (which I sincerely hope you have), you know its basic building blocks are linear: each neuron computes a weighted sum of its inputs. To introduce non-linearity, we apply activation functions, which generate each neuron's output from the input values fed into the network.
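To make this concrete, here is a minimal, framework-free Python sketch of a single neuron. It computes a weighted sum of its inputs plus a bias, then passes the result through an activation function. The helper names (`neuron`, `identity`, `sigmoid`) and the numbers are hypothetical, chosen only for illustration. This is not the author's code or a Keras API.

```python
import math

def neuron(inputs, weights, bias, activation):
    """One artificial neuron: weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias  # the linear part
    return activation(z)  # the (possibly non-linear) activation

def identity(z):
    return z

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical inputs, weights and bias.
x = [1.0, 2.0]
w = [0.5, -0.25]
b = 0.1
print(neuron(x, w, b, identity))  # weighted sum: 0.5 - 0.5 + 0.1 = 0.1
print(neuron(x, w, b, sigmoid))   # sigmoid(0.1), roughly 0.525
```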

Activation functions can be divided into three types:

- Linear Activation Function

- Binary Step Activation Function

- Non-linear Activation Functions

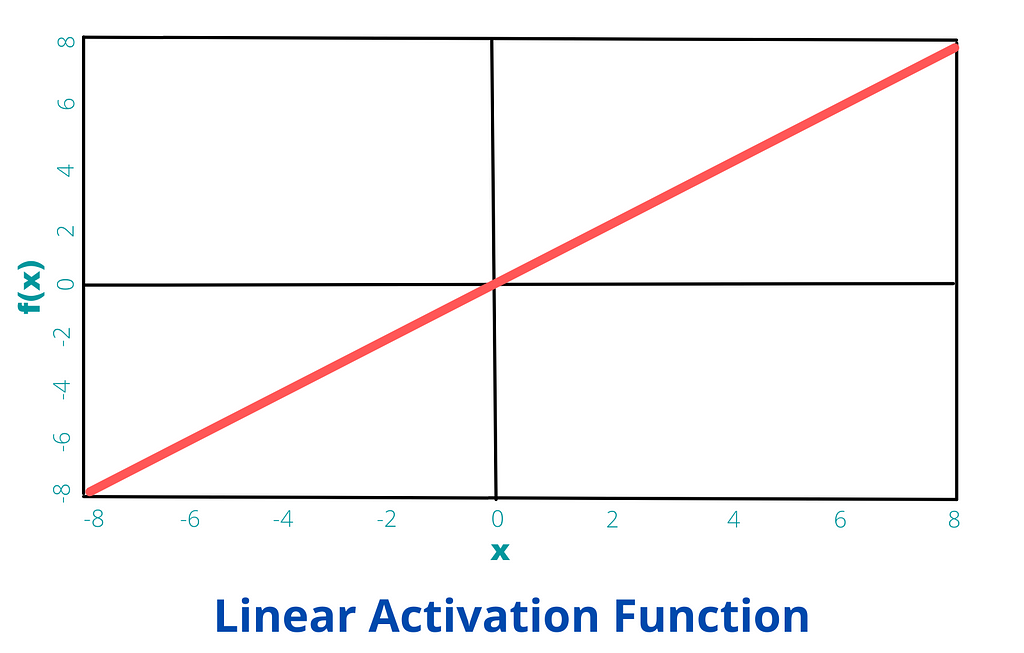

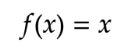

Linear Activation Function

The output is proportional to the input: the function simply passes the weighted sum through unchanged. Its range is (-∞, ∞).

Mathematically, the same can be written as:

$$f(x) = x$$

Implementation of the same in Keras is shown below:

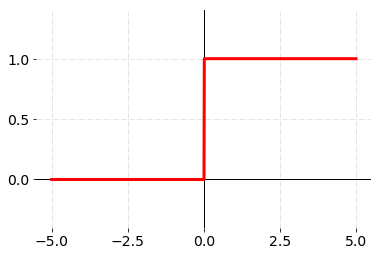

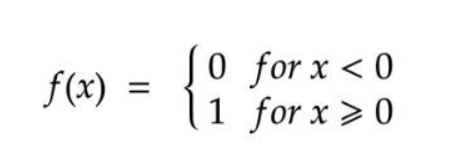
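As a hedged, framework-free stand-in for the Keras code, the sketch below shows the linear activation in plain Python. In Keras the same behaviour is selected with `activation="linear"` on a layer such as `Dense`. The function names here are hypothetical. The derivative is the constant 1 for every input, so the gradient carries no information about the input itself.

```python
def linear(x):
    """Linear (identity) activation: f(x) = x, with range (-inf, inf)."""
    return x

def linear_grad(x):
    """Derivative of the linear activation: the constant 1, whatever x is."""
    return 1.0

for v in [-3.0, 0.0, 2.5]:
    print(v, linear(v), linear_grad(v))  # the output always equals the input
```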

Binary Step Activation Function

It compares its input with a specific threshold value to determine whether a neuron should be activated or not. At or above the threshold it outputs 1, and below it outputs 0.

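Mathematically, this is the equation of the function:

$$f(x) = \begin{cases} 1, & x \ge \theta \\ 0, & x < \theta \end{cases}$$

where θ is the threshold (commonly 0).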

Keras does not provide a built-in binary step activation, so a custom function is made using TensorFlow as follows:
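The author's TensorFlow function is not reproduced in this copy of the article, so here is a minimal plain-Python sketch instead. It assumes a default threshold of 0, and the `threshold` parameter name is hypothetical. A TensorFlow version would apply the same comparison element-wise to a tensor. The step's derivative is 0 everywhere except at the threshold, where it is undefined. That is why it is rarely used with gradient-based training.

```python
def binary_step(x, threshold=0.0):
    """Binary step: 1.0 if x is at or above the threshold, else 0.0."""
    return 1.0 if x >= threshold else 0.0

print([binary_step(v) for v in [-2.0, 0.0, 0.7]])           # [0.0, 1.0, 1.0]
print([binary_step(v, threshold=0.5) for v in [0.2, 0.7]])  # [0.0, 1.0]
```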

Non-Linear Activation Functions

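Non-linear activation functions allow an ANN to adapt to a variety of data and to differentiate between outputs. They also make stacking multiple layers meaningful. Without them, a stack of linear layers collapses into a single linear transformation. With them, the output is a non-linear combination of the input passed through the multiple layers of the network.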
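A small plain-Python check illustrates why the non-linearity matters. It uses hypothetical 2×2 weight matrices, with biases omitted for brevity. Two stacked linear layers give exactly the same result as a single layer whose weight matrix is their product. Inserting a ReLU between them breaks that equivalence.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def apply(m, v):
    """Multiply matrix m by the column vector v (given as a flat list)."""
    return [sum(m[i][k] * v[k] for k in range(len(v))) for i in range(len(m))]

def relu_vec(v):
    """Element-wise ReLU."""
    return [max(0.0, x) for x in v]

# Hypothetical weights for two layers.
W1 = [[1.0, -2.0], [3.0, 0.5]]
W2 = [[2.0, 1.0], [-1.0, 1.0]]
x = [1.0, 1.0]

two_linear_layers = apply(W2, apply(W1, x))
one_merged_layer = apply(matmul(W2, W1), x)
print(two_linear_layers, one_merged_layer)  # [1.5, 4.5] [1.5, 4.5]: the stack is one linear layer

with_relu = apply(W2, relu_vec(apply(W1, x)))
print(with_relu)  # [3.5, 3.5]: the non-linearity prevents the collapse
```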

Various non-linear activation functions are discussed below.

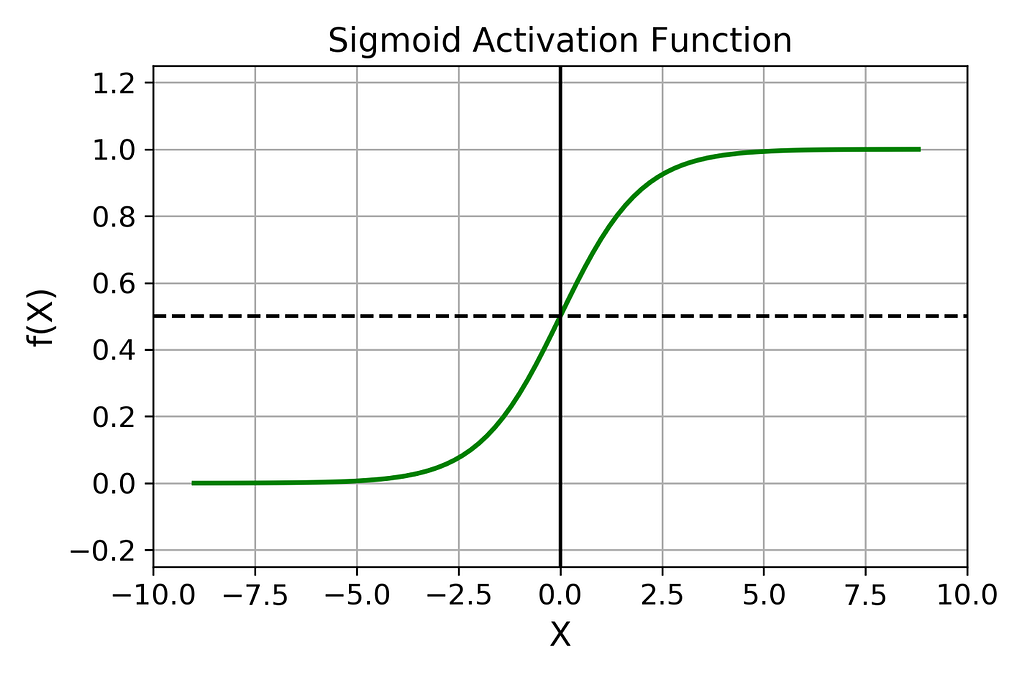

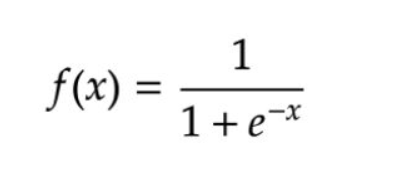

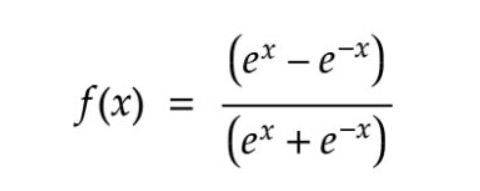

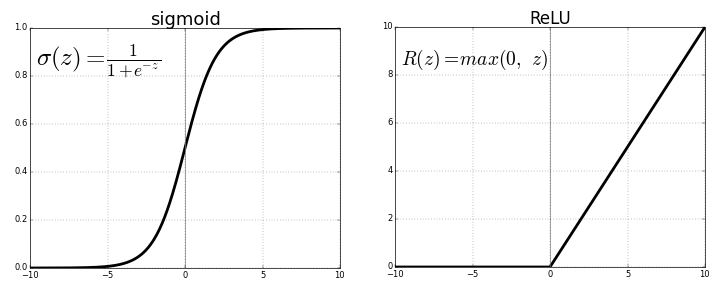

Sigmoid Activation Function

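This function accepts a number as input and returns a number between 0 and 1 (exclusive). Because the output can be read as a probability, it is mainly used in binary classification. For example, say you train a dog vs. cat classifier. The sigmoid output is the probability that the image is a dog, and thresholding it (typically at 0.5) gives a single answer: no matter how furry that dog is, it is classified as a dog, not a cat. There is no in-between, and sigmoid is perfect for it.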

Mathematically, the equation looks like this:

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$

Implementation of the same in Keras is shown below:

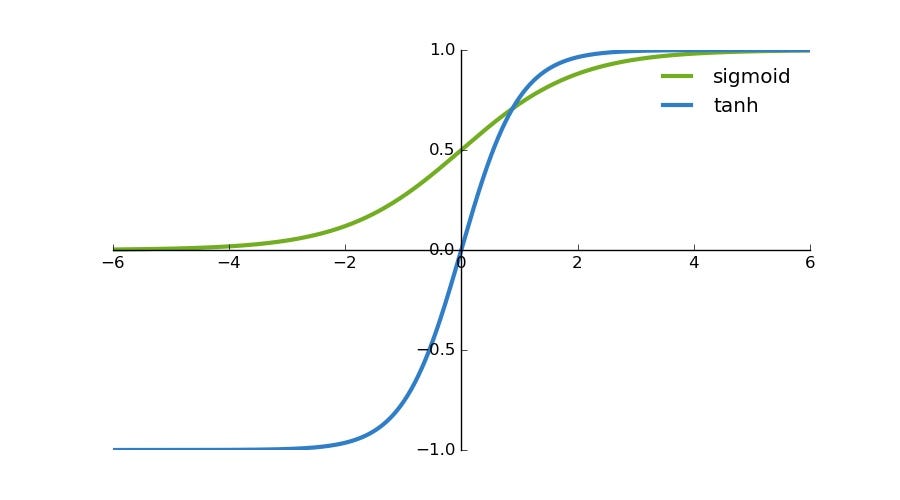
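As a hedged, framework-free sketch (not the author's Keras code), the sigmoid and the dog/cat decision described above can be written as follows. The 0.5 threshold, the labels and the `predict_label` helper are assumptions for illustration. In Keras, a binary classifier typically ends with `Dense(1, activation="sigmoid")`.

```python
import math

def sigmoid(x):
    """Logistic sigmoid 1 / (1 + e^(-x)); mathematically its output lies in (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)  # x < 0 here, so this cannot overflow
    return e / (1.0 + e)  # algebraically equal to 1 / (1 + e^(-x))

def predict_label(logit, threshold=0.5):
    """Hypothetical helper: turn the sigmoid probability of 'dog' into a hard label."""
    p_dog = sigmoid(logit)
    return ("dog" if p_dog >= threshold else "cat"), p_dog

print(sigmoid(0.0))         # 0.5
print(predict_label(2.0))   # ('dog', 0.88...)
print(predict_label(-1.5))  # ('cat', 0.18...)
```

The two-branch form avoids an `OverflowError` from `math.exp` for very negative inputs while computing the same function.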

TanH Activation Function

This activation function maps its input into the range (-1, 1). The output is zero-centered: strongly negative inputs are mapped to strongly negative outputs, and zero inputs are mapped to exactly zero.

Mathematically, the equation looks like this:

$$\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$$

Implementation of the same in Keras is shown below:

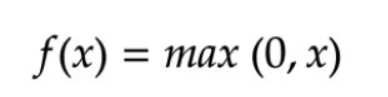
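Here is a minimal plain-Python sketch of tanh computed from its definition and compared against the standard library's `math.tanh`. The helper name is hypothetical. The loop also shows the zero-centered behaviour: negative inputs give negative outputs, and 0 gives exactly 0. In Keras the same function is selected with `activation="tanh"`.

```python
import math

def tanh_from_formula(x):
    """tanh(x) = (e^x - e^-x) / (e^x + e^-x); suitable for moderate |x| only,
    since math.exp overflows for arguments above roughly 709."""
    return (math.exp(x) - math.exp(-x)) / (math.exp(x) + math.exp(-x))

for v in [-3.0, -0.5, 0.0, 0.5, 3.0]:
    # Compare with the standard library's numerically robust math.tanh.
    print(v, round(tanh_from_formula(v), 4), round(math.tanh(v), 4))
```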

ReLU (Rectified Linear Unit)

It is one of the most commonly used activation functions. It mitigates the vanishing gradient problem because its gradient is exactly 1 for every positive input. By contrast, the gradients of sigmoid and tanh shrink toward zero for large inputs. The range of ReLU is [0, ∞).

Mathematically, the equation looks like this:

$$f(x) = \max(0, x)$$

Implementation of the same in Keras is shown below:

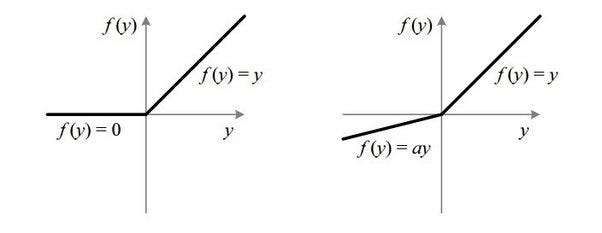

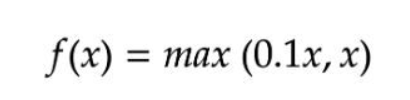
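To illustrate the vanishing-gradient point, this plain-Python sketch compares ReLU's derivative with the sigmoid's. The helper names are hypothetical. It assumes the common convention of using a derivative of 0 at exactly x = 0. The last two lines apply the chain rule across 10 layers, where derivatives are multiplied together. They contrast the best case for 10 sigmoid derivatives (each at most 0.25) with 10 ReLU derivatives on positive inputs. In Keras, ReLU is selected with `activation="relu"`.

```python
import math

def relu(x):
    """ReLU: max(0, x), with range [0, inf)."""
    return max(0.0, x)

def relu_grad(x):
    """ReLU derivative: 1 for x > 0, 0 otherwise (using 0 at x == 0 is a convention)."""
    return 1.0 if x > 0 else 0.0

def sigmoid_grad(x):
    """Sigmoid derivative s * (1 - s): at most 0.25 (at x == 0), tiny for large |x|."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)

for v in [-2.0, 0.5, 10.0]:
    print(f"x={v}: relu={relu(v)}, relu'={relu_grad(v)}, sigmoid'={sigmoid_grad(v):.6f}")

# Chain rule across 10 layers: the per-layer derivatives are multiplied together.
print(0.25 ** 10)  # about 9.5e-07, the best case for 10 sigmoid derivatives
print(1.0 ** 10)   # 1.0 for 10 ReLU derivatives on positive inputs
```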

Leaky ReLU

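An upgraded version of ReLU, like Covid variants… sensitive topic… OK, fine… getting back to Leaky ReLU. It is an upgrade because it addresses the dying ReLU problem, where neurons that output 0 for every input receive zero gradient and stop learning. Instead of being flat for negative inputs, Leaky ReLU has a small positive slope in the negative region.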

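Mathematically, the equation looks like this:

$$f(x) = \begin{cases} x, & x \ge 0 \\ \alpha x, & x < 0 \end{cases}$$

where α is a small positive constant, such as 0.01.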

Implementation in Keras is coming right below:

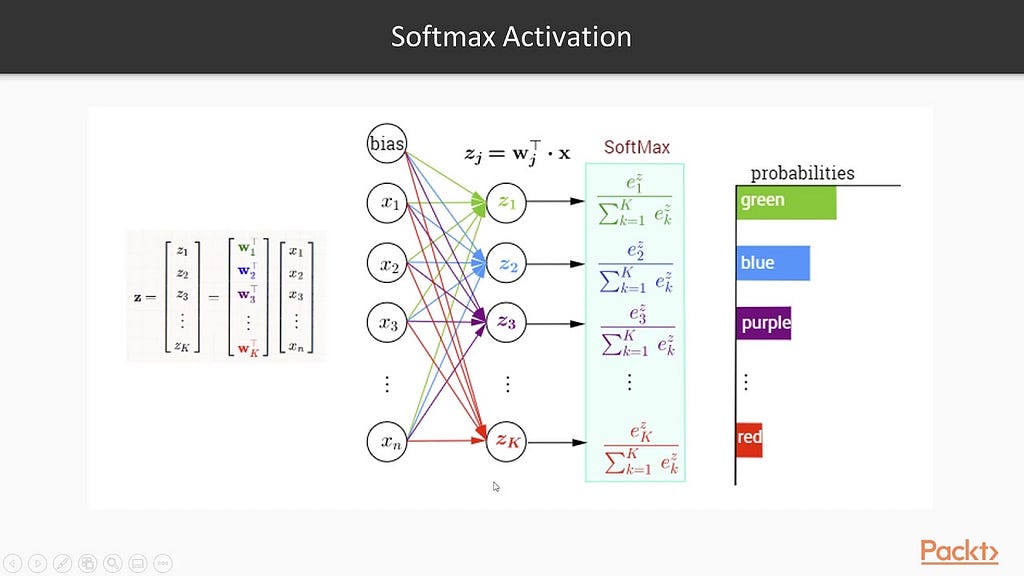

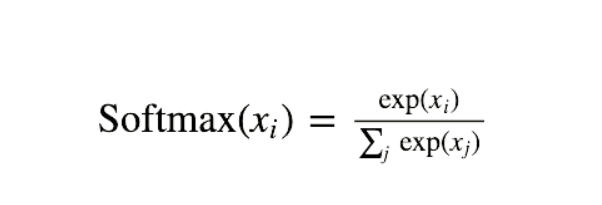
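Here is a minimal plain-Python sketch of Leaky ReLU and its derivative. It assumes a slope of α = 0.01, a common choice but an assumption here; Keras's `tf.keras.layers.LeakyReLU` layer exposes its own configurable slope. The function names are hypothetical. For negative inputs the derivative is α rather than 0, which is what keeps such neurons from dying.

```python
def leaky_relu(x, alpha=0.01):
    """Leaky ReLU: x for x >= 0, alpha * x for x < 0 (alpha is a small positive slope)."""
    return x if x >= 0 else alpha * x

def leaky_relu_grad(x, alpha=0.01):
    """Derivative: 1 for x >= 0 (using 1 at x == 0 is a convention), alpha for x < 0; never 0."""
    return 1.0 if x >= 0 else alpha

for v in [-5.0, -0.1, 0.0, 2.0]:
    print(v, leaky_relu(v), leaky_relu_grad(v))
```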

SoftMax Activation Function

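It's a combination of… let's guess. Is it tanh? Hmm, not quite. ReLU? No. Or its leaky counterpart? Mhh, not quite…. OK, let's reveal it: it is a combination of many sigmoids, or more precisely, a generalization of the sigmoid to more than two classes. It determines relative probabilities: every output lies between 0 and 1, and all outputs sum to 1.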

In multiclass classification, it is the most commonly used activation for the last layer of the classifier. It gives the probability of each class relative to the others.

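Mathematically, the equation looks like this:

$$\mathrm{softmax}(z)_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}, \quad i = 1, \dots, K$$

where z is the vector of K logits, one per class.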

Implementation in Keras is given below:
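The Keras code itself is missing from this copy of the article. In Keras, softmax is typically used as the final layer's activation, e.g. `Dense(num_classes, activation="softmax")`. The plain-Python sketch below uses hypothetical helper names and logits. It computes softmax in the usual numerically stable way, by subtracting the largest logit first. It also checks the sigmoid connection mentioned above: with two classes, softmax reduces to a sigmoid of the logit difference.

```python
import math

def softmax(logits):
    """Softmax: exp(z_i) / sum_j exp(z_j); outputs are positive and sum to 1."""
    m = max(logits)  # subtracting the max leaves the result unchanged but avoids overflow
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

probs = softmax([2.0, 1.0, 0.1])     # hypothetical logits for a 3-class classifier
print([round(p, 3) for p in probs])  # [0.659, 0.242, 0.099]
print(round(sum(probs), 10))         # 1.0

# With two classes, softmax reduces to a sigmoid of the logit difference.
a, b = 1.3, -0.4
print(softmax([a, b])[0], sigmoid(a - b))  # equal up to floating-point rounding
```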

The whole notebook containing all the code used above:

If you wanna contact me, let's connect on LinkedIn (link below):

https://www.linkedin.com/in/tripathiadityaprakash/

Types of Activation Functions in Deep Learning explained with Keras was originally published in Chatbots Life on Medium, where people are continuing the conversation by highlighting and responding to this story.