With Examples from Google Analytics and Adobe Analytics

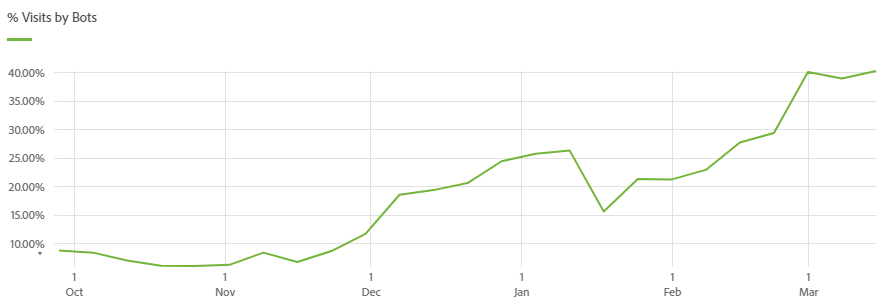

This is part I of the never-ending story on how to deal with Bots in your Analytics data. I review common, yet usually insufficient or even completely failing approaches. Why did I give up on AI-driven solutions like ReCaptcha, Akamai Bot Manager or Ad Fraud Detection tools? How good are the built-in Bot Filters? Should you at least maintain Bot Filters/Segments on top of GA views/AA Virtual Report Suites? Why does Server-Side Tracking exacerbate the Bot issues? I will finally give a peek at a client who saw Bot Traffic surging to over 40%, a case which made me reconsider entirely how to approach Bot Filtering.

The topic is as old as the world of Web Analytics: Bots (e.g. “crawlers” or “spiders”). They come without warning, wasting your time and money, and often causing spam in your data that is hard or impossible to repair.

Common Approaches to Bot Filtering

A: Web Analytics Tools’ built-in Bot Filters

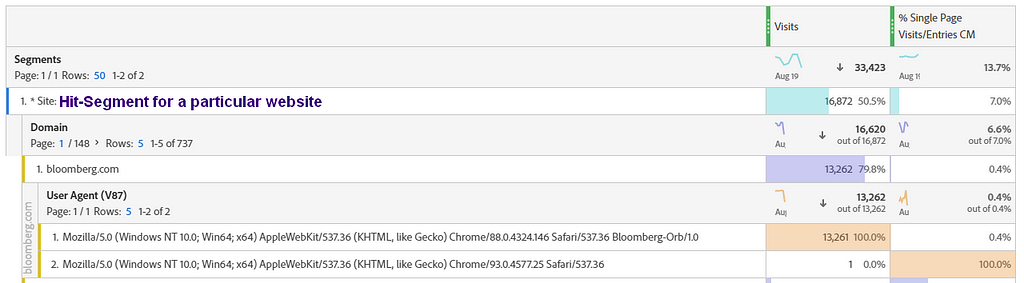

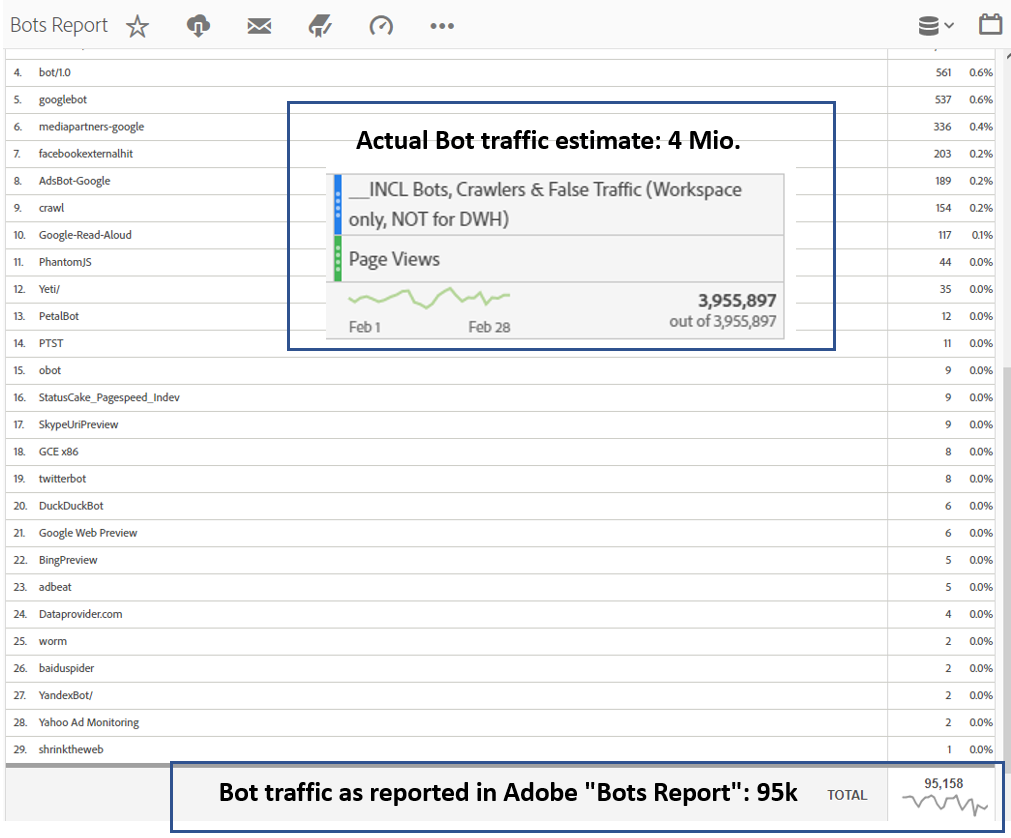

The “Bot problem” has intensified due to the growing traffic from JavaScript-executing and cookie-setting Bots. Despite that growth, or perhaps because of it, no Web Analytics solution has gotten the Bots under control. Even though there are built-in Bot Filters in both Google Analytics and Adobe Analytics, they detect only a fraction of the actual Bots. For example, the “Bots Report” in the old Adobe Analytics interface (which is likely to be sunset soon) tells me that a mere 95k of my Pageviews came from Bots, while in reality it was close to 4 million.

Let me be short for a change and note that the archaic “Filter by IP” or “Bot Rules” interfaces are a nuisance to maintain and not a sensible option anyway. Bot Rules can handle only User Agent and IP addresses as rules. IP addresses can’t be used for filtering if you obfuscate or truncate the IP, which everybody does these days in Europe. And there are only a few Bots that are recognizable through their User Agent.
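To make the IP truncation problem concrete, here is a minimal Python sketch. It assumes that truncation zeroes the last octet of an IPv4 address (a common anonymization scheme); the addresses come from a documentation range, and `BOT_IP` and `matches_bot_rule` are hypothetical names, not part of any Analytics tool.

```python
import ipaddress

# Hypothetical bot rule: one exact address (documentation range, not a real Bot).
BOT_IP = "203.0.113.77"


def truncate_ipv4(ip: str) -> str:
    """Zero the last octet, assuming this is how the IP gets truncated."""
    network = ipaddress.ip_network(f"{ip}/24", strict=False)
    return str(network.network_address)


def matches_bot_rule(ip: str) -> bool:
    """An exact-match IP rule, as you would enter it in a Bot Rules interface."""
    return ip == BOT_IP


bot_hit_ip = "203.0.113.77"    # the Bot
human_hit_ip = "203.0.113.12"  # a human in the same /24 network

# After truncation, Bot and human are indistinguishable by IP.
truncated_bot = truncate_ipv4(bot_hit_ip)
truncated_human = truncate_ipv4(human_hit_ip)

print(truncated_bot, truncated_human)
print(matches_bot_rule(bot_hit_ip), matches_bot_rule(truncated_bot))
```

Once the last octet is gone, the exact-match rule no longer fires, and a rule on the truncated address would also throw out every human in the same network.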

Google Analytics has made matters worse by no longer giving you data on network providers. That data, like Adobe’s “Domain” dimension, used to be one of the best ways to identify and filter Bots (at least after the fact). That being said, GA’s “exclude bots and spiders” flag is not much better than Adobe’s built-in Bot Filter. If you compare a GA View with and without the Bots flag, the difference is usually tiny. The views I looked at showed a mere 1% of Sessions less with the Bot Filtering flag.

Google Analytics 4 has Bot Filtering applied by default, and you cannot remove it, nor is there any way to verify what it does (black box):

“At this time, you cannot disable known bot traffic exclusion or see how much known bot traffic was excluded.”

B: Pretend Bots are irrelevant

Life with Bots is a plague. Many try to ignore Bots altogether and pretend that they don’t have much of an impact on the data, because their traffic is too small to be relevant, or that it is constantly at about the same level, so there is always the same margin of distortion. In some cases, this is true. Bots don’t attack each site the same way. Yet more often, it is wrong. Bots can strongly affect your entire Conversion Rate (I remember my first GA client in 2018 having nearly 50% of their sessions generated by Bots). But more frequently, they mess up the data for specific reports.

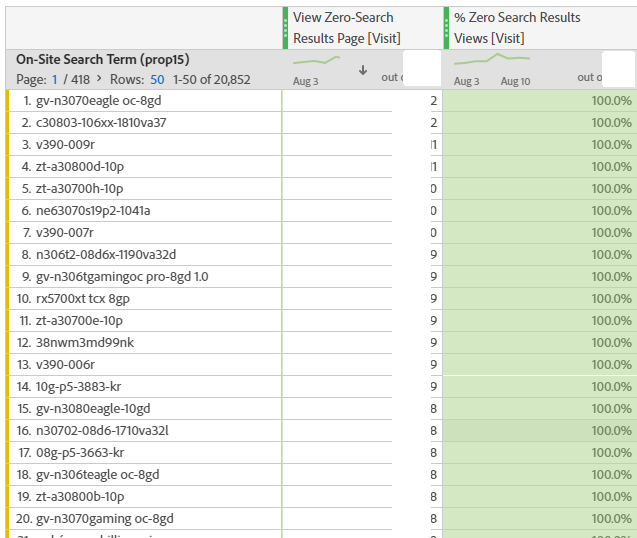

See this example of a comparably small Bot that spammed the site search with just about a thousand Pageviews, “typing” queries that only a bot can type, thus messing up our zero-results search terms report. This is a nuisance for the Search Management Team, because they prefer optimizing zero-result searches of humans:

C: Apply and Maintain Custom Filters / VRS Segments

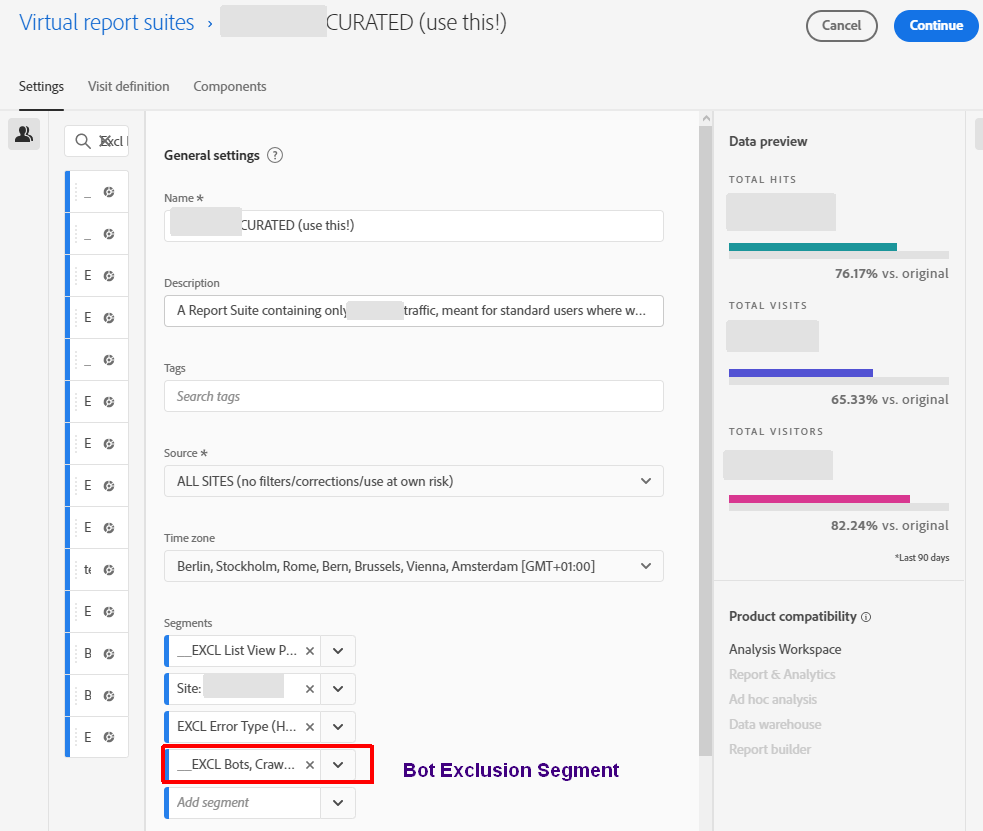

Others spend a lot of time creating and updating View Filters in GA, which unfortunately only apply to data collected after the filter was set up (not retroactively), so they always come too late. A common practice in Adobe Analytics is to keep enhancing “Bot Segments” and put those on top of your Virtual Report Suites (see chapter “why you should use Virtual Report Suites”). That filters out Bots everywhere and retroactively AND reduces complexity for your users, because they don’t ever need to learn about Bots or see any Bot Segments. However, those filters/segments grow and grow, slow down queries, are prone to errors and a pain in the ass to maintain. And they become impossible to maintain once you deal with a true Bot rush (see the client case further down). Still, you need this approach at least to some degree.

D: Specialized AI-driven Bot Detection Solutions

Others again try to piggyback on Bot detection solutions. There are the simpler ones that believe they can do it all just by analyzing mouse movements. And there are the known Bot eaters like ReCaptcha, Akamai’s Bot Manager or PerimeterX (who claimed to have an Adobe Analytics integration, but disappeared when asked about specifics). These solutions are usually based on a mix of behavioral algorithms and pattern matches: The algorithmic part checks for certain bot-like behaviors of a user (usually identified by an IP address), while the pattern matches check the UserAgent/IP address against long lists of known bots. If you integrate the solutions the right way, they can drop their verdict (usually a “Bottiness score” or a “Bot/no Bot” flag) back into the browser or via a request to your server-side Tag Manager, and thus make this information consumable for Analytics tracking logic.

In my experience, none of these solutions has proven reliable or practical enough for the type of Bot filtering that is needed for Analytics (disclaimer: I have not tried ReCaptcha, but from reading about it, it will have the same issues). Why?

- If the solutions say someone is likely a bot, this is often true, but too often also not.

- Moreover, they miss out on way too many real bots.

- Most importantly, the AI part in them usually means they can’t make their behavior-based Bot verdict until after the first couple of Pageviews, because they first need to see some behavior before getting a reliable score. So in the moment when our Tag Management System has to decide whether to track that first Pageview, the Bot detectors can’t tell yet whether this is a Bot or a human (or a zombie).
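The timing problem from the last point can be sketched in a few lines of Python. This is only an illustration under assumptions: `HypotheticalDetector` is a stand-in and not any vendor’s API, and the threshold of three Pageviews before a verdict is made up.

```python
from dataclasses import dataclass
from typing import Optional

# Assumption for illustration: the detector needs 3 Pageviews before it commits.
MIN_PAGEVIEWS_FOR_VERDICT = 3


@dataclass
class HypotheticalDetector:
    """Stand-in for a behavior-based Bot detector (not a real vendor API)."""
    is_bot: bool
    seen: int = 0

    def observe(self) -> Optional[bool]:
        """Return None while undecided, then the Bot/no-Bot verdict."""
        self.seen += 1
        if self.seen < MIN_PAGEVIEWS_FOR_VERDICT:
            return None
        return self.is_bot


def simulate_session(is_bot: bool, pageviews: int) -> int:
    """Count how many Pageviews end up tracked in Analytics."""
    detector = HypotheticalDetector(is_bot)
    tracked = 0
    for _ in range(pageviews):
        verdict = detector.observe()
        # No verdict yet (None) means we have to track, or we lose humans.
        if verdict is not True:
            tracked += 1
    return tracked


print(simulate_session(is_bot=True, pageviews=5))   # Bot, 5 Pageviews
print(simulate_session(is_bot=True, pageviews=1))   # Bot, single Pageview
print(simulate_session(is_bot=False, pageviews=5))  # human
```

Under these assumptions, the first Pageviews of every Bot session are already in the data (and billed) before the verdict arrives, and a Bot that only requests one page per session is never filtered at all.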

It’s not the fault of the solutions, they just don’t mesh with the way Analytics tracking works

I am not saying these solutions suck. It’s not their fault. But first, they are built to be Bot “Managers”, not Bot “Filters”. So they are built to prevent excessive load on your servers and fraud attempts, but often don’t mind if a slow-moving crawler checks out thousands of product pages. And second, Analytics solutions unfortunately don’t give you an option to say:

“Please delete everything we already tracked from this Bot, and also tell our Analytics vendor that they shall not bill the server calls incurred by this dude.”

So at the client we will take a closer look at in a bit, we could never use the signals from these Bot Detection solutions.

AI-driven Bot Detection is not as good as it sounds

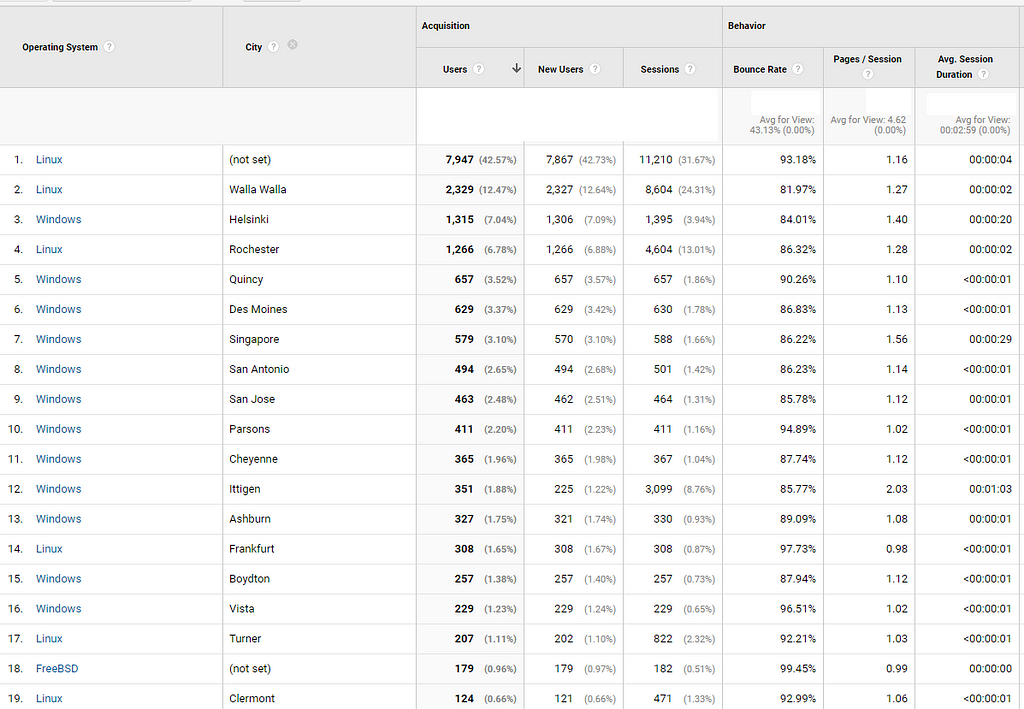

After defending these Bot Detection solutions somewhat, I have to lash out at them a bit. First, I was shocked how many really obvious and really traffic-heavy Bots (easily identifiable by their network domains) one particular expensive solution missed completely, even after days of the same IP addresses spamming the site. That was the nail in the coffin for my attempt to piggyback on them for Analytics-oriented Bot Filtering. Multiple improvement rounds did not change much. See some examples:

Special Case: Ad Fraud Detection Tools, or how to lose a lot of money due to overzealous AI

The other case where I need to lash out at AI-driven Bot detection was a particularly costly one (for the client): If you are in “Performance Marketing for Bots” aka Social & Display Advertising (and Paid Search to some extent as well), you have probably run into Bot-induced ad fraud problems of massive scale at some point. Thanks to Augustine Fou (who owns an ad fraud Analytics tool himself) for an insightful presentation on the topic for Adam Greco’s SDEC (some parts of it are in this article, but better become an SDEC member for free). And of course, there is Tim Hwang’s book “Subprime Attention Crisis” on which there was a fabulous episode of the Analytics Power Hour in January.

Some of these Ad Fraud Detection tools block ads for users whom they believe to be Bots or any other kind of user that likely won’t buy (“window shoppers” etc.). For example, the solution the client tested (I won’t name it here) stopped showing Google ads to supposed “Bots/non-converters/enemies/etc.”. The goal was to have fewer clicks that end up not converting anyway, because you pay for each click after all. So they expected a decrease in traffic in exchange for an increase in Return on Ad Spend. And the tool vendors bragged about the millions they would save.

The solution did lower the traffic drastically, but also dragged down Revenue. The Conversion Rate and the Return on Ad Spend increased only a bit. After switching off the solution again, Revenue and Traffic both skyrocketed. It was clear now: the ad fraud detection blocked ads for way too many humans.

If you want to avoid such costly test drives, demand that the Bot Filter vendor does a “dry run” where their tool runs, but does not really filter anything and simply marks users it would filter out. Then compare that sample to your Analytics, e.g. via a common user ID key or an IP address (Bot Filtering is one of the “legitimate uses” for tracking PII like the IP) to see what their tool would have filtered out. I demanded this, but got only about 5 IP addresses they would have filtered (of which 2 were from humans), and then I was shut out from the discussion. The test run started, and the client lost a lot of money.
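A dry-run comparison of this kind could look roughly like the following Python sketch. All data and names here are invented for illustration: `flagged_ids` stands for the users the vendor’s tool would have filtered, and `users` for per-user order data exported from Analytics, joined via a common user ID.

```python
from typing import Dict, Set


def evaluate_dry_run(flagged_ids: Set[str], users: Dict[str, dict]) -> dict:
    """Compare the vendor's would-be-filtered users against Analytics data."""
    # Only flagged users that also exist in Analytics can be evaluated.
    matched = flagged_ids.intersection(users)
    # Flagged users with orders are very likely humans, i.e. false positives.
    buyers = {uid for uid in matched if users[uid]["orders"] > 0}
    revenue_at_risk = sum(users[uid]["revenue"] for uid in buyers)
    total_revenue = sum(user["revenue"] for user in users.values())
    return {
        "flagged_and_matched": len(matched),
        "flagged_buyers": len(buyers),
        "revenue_at_risk": revenue_at_risk,
        "revenue_share_at_risk": revenue_at_risk / total_revenue if total_revenue else 0.0,
    }


# Invented sample data: user ID -> orders and revenue measured in Analytics.
users = {
    "u1": {"orders": 0, "revenue": 0.0},
    "u2": {"orders": 2, "revenue": 120.0},
    "u3": {"orders": 1, "revenue": 80.0},
    "u4": {"orders": 0, "revenue": 0.0},
}
flagged_ids = {"u1", "u2", "u9"}  # "u9" never shows up in Analytics

result = evaluate_dry_run(flagged_ids, users)
print(result)
```

If a noticeable share of revenue sits with users the tool would have blocked, that is a strong hint the tool is too aggressive, and you learn it before any ad is actually withheld.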

So anytime “AI” is mentioned as a cure-all method, always be over-cautious, because usually the people offering this AI haven’t understood the complexity of the problem sufficiently yet.

But back to our main topic: How can we get those Bots out of the Analytics data reliably, and before they get into Analytics in the first place? Let’s introduce the client example that changed the way I approached Bot Filtering entirely.

The Client Case: An uncontrollable Bot Rush

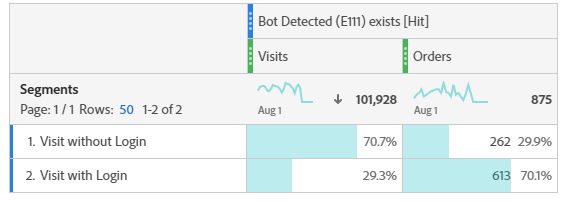

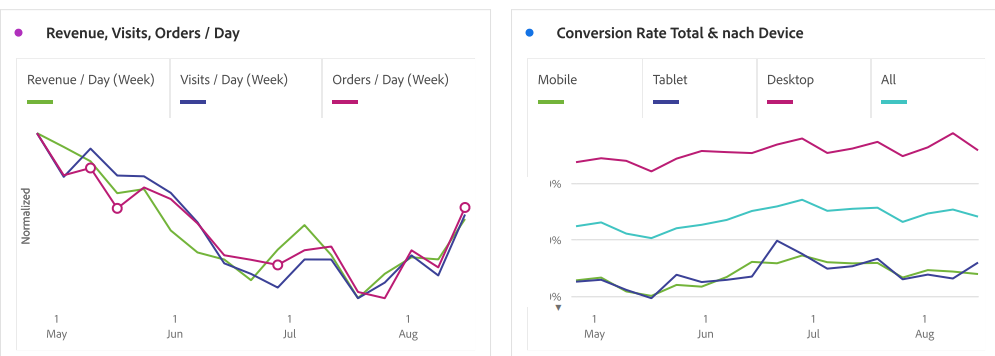

Approach C (maintaining segments on top of Virtual Report Suites) sums up my life with Bots as well. At least once a month, someone reported that some on-site search report looked weird. Then we found out that some new crawler had taken to crawling all potential search result pages for products associated with brand “Mickey Mouse” and competitors. Another frequent case was freakishly low Product Conversion Rates for certain brands or products, going down to near zero because of Bots.

The “solution” was usually to find a clearly identifying but not too complex (ideally one condition) criterion for that crawler/pingbot/whatever (usually the Network Domain was the best indicator), add that criterion to the Bot Segments, then tell people to reload the report, and then we could go back to actually useful work.
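In code terms, such a Bot Segment boils down to an exclusion list that is applied at query time. The following Python sketch is a simplification with invented hits and domain names; it is not how Adobe Analytics implements segments internally.

```python
from typing import Dict, List, Set


def apply_bot_segment(hits: List[Dict[str, str]], bot_domains: Set[str]) -> List[Dict[str, str]]:
    """Keep only hits whose network domain is not on the Bot list."""
    return [hit for hit in hits if hit["network_domain"].lower() not in bot_domains]


# Invented sample hits; the domains are placeholders, not real Bot networks.
hits = [
    {"day": "2021-01-04", "network_domain": "isp.example", "search_term": "running shoes"},
    {"day": "2021-01-04", "network_domain": "crawler-host.example", "search_term": "zzqx 0001"},
    {"day": "2021-01-05", "network_domain": "Crawler-Host.example", "search_term": "zzqx 0002"},
]

bot_domains: Set[str] = set()
before = apply_bot_segment(hits, bot_domains)

# One simple condition added to the segment after spotting the crawler.
bot_domains.add("crawler-host.example")
after = apply_bot_segment(hits, bot_domains)

print(len(before), len(after))
```

Because the segment is evaluated whenever a report is loaded, adding the domain cleans up the past days as well, which is exactly why reloading the report was enough.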

This worked decently well while the Bot traffic (Visits) was below 10–15% of the traffic measured by Analytics. Occasionally people asked why data from the past had changed, but it was not grave usually. I am saying “the traffic measured by Analytics” because many Bots are nice enough and do not execute Analytics scripts. Or they understand that this will make it easier for the website to eventually catch and block them. Or they are too stupid.

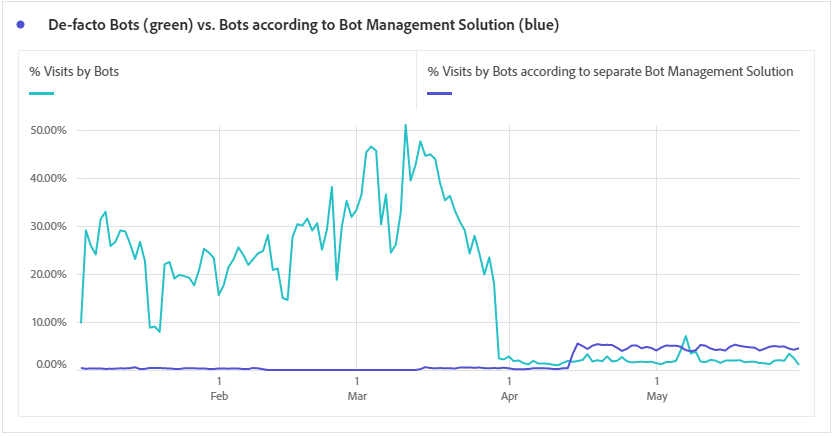

Over 40% Bots, the slippery Type

But in late 2020, some crazy Bot wave started, and by March ’21 the 10–15% had gone up to over 40%. The Bots became like slippery worms, changing their network domain names and IP addresses all the time, so after finding and adding 100 new Bot domains to our Bot segment on Monday, we could add another 50 each on Tuesday, Wednesday and Thursday. It was insane. The expensive IT-held Bot Management tool detected … wait for it … nothing!

Server-Side Tracking exacerbates the Bot Issues

Curiously, this problem only affected the server-side tracking technologies (actually Client-to-Server-to-Vendor). Why is that? To make a long story way too short, in server-side tracking, your browser never sends a request to “google-analytics.com/collect” or “…omtrdc.net/b/ss” etc… Thus, even if the Bot is one of those who do not want to get tracked by Analytics, it can’t evade tracking! So if you switch to Server-Side, get ready to deal with that additional Bot traffic.
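A strongly simplified Python model of this effect: it assumes a Bot avoids tracking simply by never requesting the vendor’s host, and that the server-side endpoint lives on the site’s own host. `FIRST_PARTY_HOST` and the function name are hypothetical, and real Bots may behave differently.

```python
# Vendor host named in the text; client-side tracking sends hits there directly.
VENDOR_HOST = "google-analytics.com"
# Hypothetical first-party host that receives hits in a server-side setup.
FIRST_PARTY_HOST = "www.example.com"


def hit_is_recorded(tracking_mode: str, hosts_bot_avoids: set) -> bool:
    """Return True if the Bot's Pageview ends up in Analytics."""
    if tracking_mode == "client_side":
        endpoint = VENDOR_HOST
    elif tracking_mode == "server_side":
        # The browser only talks to our own host; the server forwards to the vendor.
        endpoint = FIRST_PARTY_HOST
    else:
        raise ValueError(f"unknown tracking mode: {tracking_mode}")
    return endpoint not in hosts_bot_avoids


# A "polite" Bot that skips requests to the Analytics vendor.
polite_bot_avoids = {VENDOR_HOST}

print(hit_is_recorded("client_side", polite_bot_avoids))
print(hit_is_recorded("server_side", polite_bot_avoids))
```

The Bot cannot put the first-party host on its avoid list without giving up crawling the site, so in this model the server-side setup records hits that the client-side setup never saw.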

But I digress… So we had this massive increase in Bot traffic, and it felt like shoveling water out of a house without a roof during massive rainfalls. Sisyphus live. We asked around whether IT or Marketing or agencies or anybody could help explain what was going on. Nothing relevant surfaced.

And … that’s it for part I. Read Part II to find out how we solved the problem by turning the concept of Bot Filtering upside down. Coming soon!

Bots & Analytics: Common Failing Approaches to Bot Filtering, including AI was originally published in Chatbots Life on Medium, where people are continuing the conversation by highlighting and responding to this story.