
What would you ask the Machine Learning model?

General Artificial Intelligence is still out of reach, but we have already produced a large number of Machine Learning models, and they are widely adopted in many areas of our lives. For this reason, understanding the decisions of black-box models is becoming critical, and we are seeing growing interest in eXplainable AI (XAI) along with a growing number of tools in the field.

Don’t forget about the human!

Yes, we should keep in mind that we explain models to humans. That is why we build visual or textual explanations: they are more readable and informative than raw numbers. But how do we know what should be explained?

At the moment, we only have a set of static tools. We need to make the process interactive. Imagine trying to understand a decision made by a human expert. What would you do? Talk to them and ask questions! We should apply the same pattern to predictive models. If we want to understand what people want to know, we need to let them ask questions. Only once we have these questions can we think about how to answer them.

Human, please meet an ML model. ML model, this is a human.

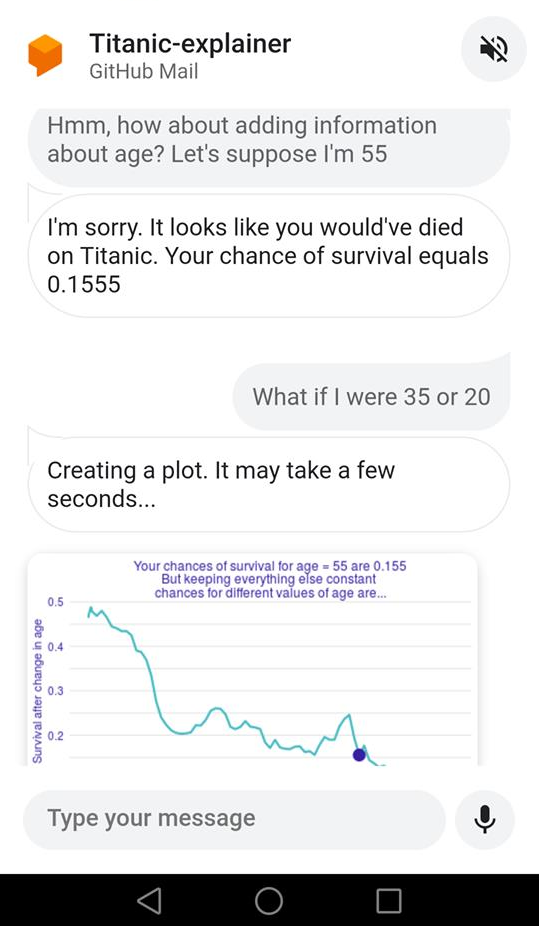

We built a conversational system (xaibot, a chatbot for XAI) with a human on one end and the ML model on the other. We named this xaibot drAnt (after doctorant, i.e. a PhD student). It lets you talk to a Random Forest model that predicts survival on the Titanic. You can see an example conversation below.

drAnt understands and responds to several groups of queries:

- Supplying information about the user (passenger), e.g. specifying age or gender. Alternatively, you can start as one of the main characters from the movie Titanic.

- Inference: asking about the probability of survival.

- Dialogue support queries: listing variables, restarting, etc.
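One simple way to route incoming messages into these three groups is keyword matching. The sketch below is a hypothetical illustration, not drAnt’s actual language-understanding component (which this post does not describe): the intent names, the regular expressions, and the `classify` and `extract_slots` helpers are all invented here.

```python
import re

# Hypothetical keyword rules, checked in insertion order (dicts keep
# insertion order in Python 3.7+). Not drAnt's real NLU.
INTENT_PATTERNS = {
    "dialogue_support": [r"\brestart\b", r"\bvariables?\b", r"\bhelp\b"],
    "user_info": [r"\bi'm\b", r"\bi am\b", r"\byears? old\b", r"\bmy (?:age|gender|name)\b"],
    "inference": [r"\bsurviv", r"\bchances?\b", r"\bprobability\b"],
}


def normalize(utterance):
    # Lower-case and turn typographic apostrophes into plain ones.
    return utterance.lower().replace("\u2019", "'")


def classify(utterance):
    """Return the first intent whose patterns match, else 'fallback'."""
    text = normalize(utterance)
    for intent, patterns in INTENT_PATTERNS.items():
        if any(re.search(p, text) for p in patterns):
            return intent
    return "fallback"


def extract_slots(utterance):
    """Pull age and gender out of a user_info message, if present."""
    text = normalize(utterance)
    slots = {}
    age = re.search(r"\b(\d{1,3})\b", text)
    if age:
        slots["age"] = int(age.group(1))
    # \b keeps "man" from matching inside "woman" and "male" inside "female".
    if re.search(r"\b(?:female|woman|girl)\b", text):
        slots["gender"] = "female"
    elif re.search(r"\b(?:male|man|boy)\b", text):
        slots["gender"] = "male"
    return slots


for msg in ["I'm 30 years old", "What are my chances of survival?", "restart", "hello"]:
    print(msg, "->", classify(msg))
print(extract_slots("I am a 25 year old woman"))
```

Rule order matters here: a message such as “I’m 25, would I survive?” contains both user information and a question, and this toy router returns only the first match (`user_info`). A real bot would more likely use a trained intent classifier that can also handle phrasings the rules miss.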


Xaibot uses visual explanations from the DALEX toolset. It answers what-if questions with Ceteris Paribus profiles and feature-contribution queries with iBreakDown.
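The ideas behind these two explanation types fit in a few lines. The snippet below is not DALEX or iBreakDown code: it is a standalone Python sketch in which `predict` is a hypothetical hand-written stand-in for the Random Forest (its coefficients are invented) and `background` is a tiny made-up sample of passengers. A Ceteris Paribus profile varies one feature (here `age`) while holding all of the passenger’s other features fixed. The break-down fixes features one at a time to the passenger’s values and records how the average prediction over the background data shifts; iBreakDown chooses that order with a heuristic by default, whereas this sketch simply takes the order as a parameter.

```python
import math


# Hypothetical stand-in for the Titanic Random Forest: a hand-written
# logistic model whose coefficients are invented for illustration.
def predict(p):
    score = 1.0 - 0.03 * p["age"] + 2.5 * (p["gender"] == "female") - 1.0 * (p["class"] - 1)
    return 1.0 / (1.0 + math.exp(-score))


# Tiny made-up background sample standing in for the training data.
background = [
    {"age": 22, "gender": "male", "class": 3},
    {"age": 38, "gender": "female", "class": 1},
    {"age": 26, "gender": "female", "class": 3},
    {"age": 54, "gender": "male", "class": 1},
]


def ceteris_paribus(model, passenger, feature, values):
    """What-if curve: vary one feature, keep every other feature fixed."""
    return [(v, model({**passenger, feature: v})) for v in values]


def break_down(model, passenger, data, order):
    """Additive attribution: fix features one by one (in `order`) to the
    passenger's values and record the shift in the mean prediction."""
    rows = [dict(r) for r in data]

    def mean_prediction():
        return sum(model(r) for r in rows) / len(rows)

    baseline = previous = mean_prediction()
    contributions = []
    for feature in order:
        for r in rows:
            r[feature] = passenger[feature]
        current = mean_prediction()
        contributions.append((feature, current - previous))
        previous = current
    return baseline, contributions


passenger = {"age": 20, "gender": "male", "class": 3}
profile = ceteris_paribus(predict, passenger, "age", [10, 20, 30, 40, 50])
baseline, contributions = break_down(predict, passenger, background, ["gender", "class", "age"])

for age, prob in profile:
    print(f"what if age = {age}: {prob:.3f}")
print(f"baseline (mean prediction): {baseline:.3f}")
for feature, delta in contributions:
    print(f"  {feature}: {delta:+.3f}")
print(f"prediction: {predict(passenger):.3f}")
```

Once every feature has been fixed, all background rows equal the passenger, so the contributions add up to the passenger’s prediction minus the baseline. Because the model is not additive, a different `order` can split that total differently, which is why the ordering heuristic matters in practice.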

So, what do people ask about?

They say gentlemen do not read each other’s mail. Sorry, drAnt, we are making an exception this time. We shared xaibot in this post and collected over 1000 human-model conversations.

User queries follow certain recurring patterns. Here are the most frequent:

- why: general explanation queries, such as “why”, “explain it to me”, “how was that derived/calculated”.

- what-if: alternative scenario queries. Frequent examples: “what if I’m older”, “what if I travelled in the 1st class”.

- what do you know about me

- data-related questions: e.g. feature histogram, distribution, dataset size.

- local feature importance: “How does age influence my survival”, “What makes me more likely to survive”.

- global feature importance: “How does age influence survival across all passengers”.

- how to improve: actionable queries for maximizing the prediction, e.g. “what should I do to survive”, “how can I increase my chances”.

The full results of the analysis can be found in this paper:

It’s just the beginning of human-model interaction

We see people engaging in conversation with the Machine Learning model. It lets them understand more than a single decision or model metrics such as accuracy. For researchers in eXplainable AI, this method provides insight into which human needs explanation methods should address.

People say talking to plants helps them grow. Talking to Machine Learning models makes those black boxes more transparent, which is a very desirable trait.

PS: drAnt has its own phone number! Yes, you can call the Machine Learning model! Not the most practical thing in this particular case, though.

PS2: Let’s not disclose this number. We have already read drAnt’s messages. After all, even a bot deserves some privacy.

Don’t forget to give us your 👏 !

What would you ask the Machine Learning model? was originally published in Chatbots Life on Medium, where people are continuing the conversation by highlighting and responding to this story.